Memory Management

Linux uses segmentation and paging to translate logical addresses into physical ones.

The kernel uses the same area of memory to hold both its code and its static data structures.

The remaining part of memory is called dynamic memory.

This area of memory is needed not only for creating new processes but also by the kernel itself.

System performance depends on how efficiently this memory is managed.

Modern operating systems do everything they can to optimize its use.

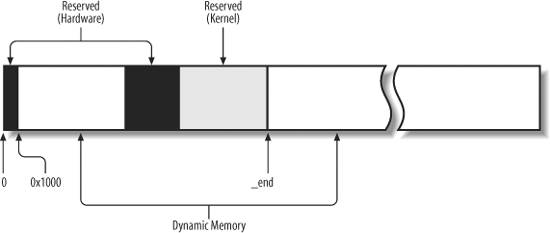

Figure 8-1 shows how page frames are used.

This part describes how the kernel allocates memory for its own needs.

The sections "Page Frame Management" and "Memory Area Management" describe two different techniques for allocating contiguous areas of memory, while the section "Noncontiguous Memory Area Management" describes a third technique that handles noncontiguous memory areas.

The topics covered include memory zones, kernel mappings, the buddy system, the slab cache, and memory pools.

Page Frame Management

We saw in the section "Paging in Hardware" in Chapter 2 how the Intel Pentium processor can use two different page frame sizes: 4 KB and 4 MB (or 2 MB if PAE is enabled; see the section "The Physical Address Extension (PAE) Paging Mechanism" in Chapter 2). Linux adopts the smaller 4 KB page frame size as the standard memory allocation unit. This makes things simpler for two reasons:

The Page Fault exceptions issued by the paging circuitry are easily interpreted. Either the page requested exists but the process is not allowed to address it, or the page does not exist. In the second case, the memory allocator must find a free 4 KB page frame and assign it to the process.

Although both 4 KB and 4 MB are multiples of all disk block sizes, transfers of data between main memory and disks are in most cases more efficient when the smaller size is used.

8.1.1. Page Descriptors

The kernel must keep track of the current status of each page frame. For instance, it must be able to distinguish the page frames that are used to contain pages that belong to processes from those that contain kernel code or kernel data structures. Similarly, it must be able to determine whether a page frame in dynamic memory is free. A page frame in dynamic memory is free if it does not contain any useful data. It is not free when the page frame contains data of a User Mode process, data of a software cache, dynamically allocated kernel data structures, buffered data of a device driver, code of a kernel module, and so on.

State information of a page frame is kept in a page descriptor of type page, whose fields are shown in Table 8-1. All page descriptors are stored in the mem_map array. Because each descriptor is 32 bytes long, the space required by mem_map is slightly less than 1% of the whole RAM. The virt_to_page(addr) macro yields the address of the page descriptor associated with the linear address addr. The pfn_to_page(pfn) macro yields the address of the page descriptor associated with the page frame having number pfn.
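As a rough illustration of the two macros and of the "less than 1%" estimate, here is a user-space sketch that models mem_map as a plain array. The reduced struct layout, the 16-frame array, the PAGE_OFFSET value of 0xC0000000, and the helper mem_map_overhead_bp() are assumptions made for this example; they are not the kernel's actual definitions.

```c
#define PAGE_SHIFT   12              /* 4 KB page frames */
#define PAGE_OFFSET  0xC0000000UL    /* assumed start of the kernel's linear mapping */
#define NR_FRAMES    16              /* toy machine with 16 page frames */

/* Hypothetical, heavily reduced stand-in for the page descriptor. */
struct page {
    unsigned long flags;
    int _count;
};

struct page mem_map[NR_FRAMES];

/* Descriptor of the page frame having number pfn. */
struct page *pfn_to_page(unsigned long pfn)
{
    return &mem_map[pfn];
}

/* Descriptor of the page frame behind a directly mapped linear address. */
struct page *virt_to_page(unsigned long addr)
{
    return pfn_to_page((addr - PAGE_OFFSET) >> PAGE_SHIFT);
}

/* mem_map overhead in hundredths of a percent of RAM (hypothetical helper). */
unsigned long mem_map_overhead_bp(unsigned long descriptor_size)
{
    return descriptor_size * 10000UL / (1UL << PAGE_SHIFT);
}
```

With a 32-byte descriptor, mem_map_overhead_bp(32) evaluates to 78, that is, about 0.78% of RAM, which agrees with the estimate given above.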

Table 8-1. The fields of the page descriptor

| Type | Name | Description |
|---|---|---|
| unsigned long | flags | Array of flags (see Table 8-2). Also encodes the zone number to which the page frame belongs. |
| atomic_t | _count | Page frame's reference counter. |
| atomic_t | _mapcount | Number of Page Table entries that refer to the page frame (-1 if none). |
| unsigned long | private | Available to the kernel component that is using the page (for instance, it is a buffer head pointer in case of buffer page; see "Block Buffers and Buffer Heads" in Chapter 15). If the page is free, this field is used by the buddy system (see later in this chapter). |
| struct address_space * | mapping | Used when the page is inserted into the page cache (see the section "The Page Cache" in Chapter 15), or when it belongs to an anonymous region (see the section "Reverse Mapping for Anonymous Pages" in Chapter 17). |
| unsigned long | index | Used by several kernel components with different meanings. For instance, it identifies the position of the data stored in the page frame within the page's disk image or within an anonymous region (Chapter 15), or it stores a swapped-out page identifier (Chapter 17). |
| struct list_head | lru | Contains pointers to the least recently used doubly linked list of pages. |

You don't have to fully understand the role of all fields in the page descriptor right now. In the following chapters, we often come back to the fields of the page descriptor. Moreover, several fields have different meanings, depending on whether the page frame is free or on which kernel component is using it.

Let's describe in greater detail two of the fields:

_count A usage reference counter for the page. If it is set to -1, the corresponding page frame is free and can be assigned to any process or to the kernel itself. If it is set to a value greater than or equal to 0, the page frame is assigned to one or more processes or is used to store some kernel data structures. The page_count( ) function returns the value of the _count field increased by one, that is, the number of users of the page.

flags Includes up to 32 flags (see Table 8-2) that describe the status of the page frame. For each PG_xyz flag, the kernel defines some macros that manipulate its value. Usually, the PageXyz macro returns the value of the flag, while the SetPageXyz and ClearPageXyz macros set and clear the corresponding bit, respectively.
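The two fields can be modelled in user space roughly as follows. This is only a sketch: the bit position chosen for PG_dirty is an assumption, the struct is reduced to the two fields under discussion, and the helpers use plain (non-atomic) bit operations, whereas the kernel relies on atomic ones.

```c
#define PG_dirty 4   /* assumed bit position, for illustration only */

/* Reduced stand-in for the page descriptor. */
struct page {
    unsigned long flags;
    int _count;          /* -1 means the page frame is free */
};

/* PageXyz-style accessor: returns the current value of the flag (0 or 1). */
int PageDirty(const struct page *p)
{
    return (int)((p->flags >> PG_dirty) & 1UL);
}

/* SetPageXyz-style helper: sets the bit. */
void SetPageDirty(struct page *p)
{
    p->flags |= (1UL << PG_dirty);
}

/* ClearPageXyz-style helper: clears the bit. */
void ClearPageDirty(struct page *p)
{
    p->flags &= ~(1UL << PG_dirty);
}

/* Number of users of the page: the _count field increased by one. */
int page_count(const struct page *p)
{
    return p->_count + 1;
}
```

A free page frame has _count equal to -1, so page_count() reports 0 users for it.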

Table 8-2. Flags describing the status of a page frame

| Flag name | Meaning |
|---|---|
| PG_locked | The page is locked; for instance, it is involved in a disk I/O operation. |
| PG_error | An I/O error occurred while transferring the page. |
| PG_referenced | The page has been recently accessed. |
| PG_uptodate | This flag is set after completing a read operation, unless a disk I/O error happened. |
| PG_dirty | The page has been modified (see the section "Implementing the PFRA" in Chapter 17). |
| PG_lru | The page is in the active or inactive page list (see the section "The Least Recently Used (LRU) Lists" in Chapter 17). |
| PG_active | The page is in the active page list (see the section "The Least Recently Used (LRU) Lists" in Chapter 17). |
| PG_slab | The page frame is included in a slab (see the section "Memory Area Management" later in this chapter). |
| PG_highmem | The page frame belongs to the ZONE_HIGHMEM zone (see the following section "Non-Uniform Memory Access (NUMA)"). |
| PG_checked | Used by some filesystems such as Ext2 and Ext3 (see Chapter 18). |
| PG_arch_1 | Not used on the 80 x 86 architecture. |
| PG_reserved | The page frame is reserved for kernel code or is unusable. |
| PG_private | The private field of the page descriptor stores meaningful data. |
| PG_writeback | The page is being written to disk by means of the writepage method (see Chapter 16). |
| PG_nosave | Used for system suspend/resume. |
| PG_compound | The page frame is handled through the extended paging mechanism (see the section "Extended Paging" in Chapter 2). |
| PG_swapcache | The page belongs to the swap cache (see the section "The Swap Cache" in Chapter 17). |
| PG_mappedtodisk | All data in the page frame corresponds to blocks allocated on disk. |
| PG_reclaim | The page has been marked to be written to disk in order to reclaim memory. |
| PG_nosave_free | Used for system suspend/resume. |

8.1.2. Non-Uniform Memory Access (NUMA)

We are used to thinking of the computer's memory as a homogeneous, shared resource. Disregarding the role of the hardware caches, we expect the time required for a CPU to access a memory location to be essentially the same, regardless of the location's physical address and the CPU. Unfortunately, this assumption is not true in some architectures. For instance, it is not true for some multiprocessor Alpha or MIPS computers.

Linux 2.6 supports the Non-Uniform Memory Access (NUMA) model, in which the access times for different memory locations from a given CPU may vary. The physical memory of the system is partitioned in several nodes. The time needed by a given CPU to access pages within a single node is the same. However, this time might not be the same for two different CPUs. For every CPU, the kernel tries to minimize the number of accesses to costly nodes by carefully selecting where the kernel data structures that are most often referenced by the CPU are stored.

The physical memory inside each node can be split into several zones, as we will see in the next section. Each node has a descriptor of type pg_data_t, whose fields are shown in Table 8-3. All node descriptors are stored in a singly linked list, whose first element is pointed to by the pgdat_list variable.

Table 8-3. The fields of the node descriptor

| Type | Name | Description |
|---|---|---|
| struct zone [] | node_zones | Array of zone descriptors of the node |
| struct zonelist [] | node_zonelists | Array of zonelist data structures used by the page allocator (see the later section "Memory Zones") |
| int | nr_zones | Number of zones in the node |
| struct page * | node_mem_map | Array of page descriptors of the node |
| struct bootmem_data * | bdata | Used in the kernel initialization phase |
| unsigned long | node_start_pfn | Index of the first page frame in the node |
| unsigned long | node_present_pages | Size of the memory node, excluding holes (in page frames) |
| unsigned long | node_spanned_pages | Size of the node, including holes (in page frames) |
| int | node_id | Identifier of the node |
| pg_data_t * | pgdat_next | Next item in the memory node list |
| wait_queue_head_t | kswapd_wait | Wait queue for the kswapd pageout daemon (see the section "Periodic Reclaiming" in Chapter 17) |
| struct task_struct * | kswapd | Pointer to the process descriptor of the kswapd kernel thread |
| int | kswapd_max_order | Logarithmic size of free blocks to be created by kswapd |

As usual, we are mostly concerned with the 80 x 86 architecture. IBM-compatible PCs use the Uniform Memory Access model (UMA), thus the NUMA support is not really required. However, even if NUMA support is not compiled in the kernel, Linux makes use of a single node that includes all system physical memory. Thus, the pgdat_list variable points to a list consisting of a single element, the node 0 descriptor, stored in the contig_page_data variable.

On the 80 x 86 architecture, grouping the physical memory in a single node might appear useless; however, this approach makes the memory handling code more portable, because the kernel can assume that the physical memory is partitioned in one or more nodes in all architectures.

8.1.3. Memory Zones

In an ideal computer architecture, a page frame is a memory storage unit that can be used for anything: storing kernel and user data, buffering disk data, and so on. Every kind of page of data can be stored in a page frame, without limitations.

However, real computer architectures have hardware constraints that may limit the way page frames can be used. In particular, the Linux kernel must deal with two hardware constraints of the 80 x 86 architecture:

The Direct Memory Access (DMA) processors for old ISA buses have a strong limitation: they are able to address only the first 16 MB of RAM.

In modern 32-bit computers with lots of RAM, the CPU cannot directly access all physical memory because the linear address space is too small.

To cope with these two limitations, Linux 2.6 partitions the physical memory of every memory node into three zones. In the 80 x 86 UMA architecture the zones are:

ZONE_DMA Contains page frames of memory below 16 MB

ZONE_NORMAL Contains page frames of memory at and above 16 MB and below 896 MB

ZONE_HIGHMEM Contains page frames of memory at and above 896 MB

The ZONE_DMA zone includes page frames that can be used by old ISA-based devices by means of the DMA. (The section "Direct Memory Access (DMA)" in Chapter 13 gives further details on DMA.)

The ZONE_DMA and ZONE_NORMAL zones include the "normal" page frames that can be directly accessed by the kernel through the linear mapping in the fourth gigabyte of the linear address space (see the section "Kernel Page Tables" in Chapter 2). Conversely, the ZONE_HIGHMEM zone includes page frames that cannot be directly accessed by the kernel through the linear mapping in the fourth gigabyte of linear address space (see the section "Kernel Mappings of High-Memory Page Frames" later in this chapter). The ZONE_HIGHMEM zone is always empty on 64-bit architectures.

Each memory zone has its own descriptor of type zone. Its fields are shown in Table 8-4.

Table 8-4. The fields of the zone descriptor

| Type | Name | Description |
|---|---|---|
| unsigned long | free_pages | Number of free pages in the zone. |
| unsigned long | pages_min | Number of reserved pages of the zone (see the section "The Pool of Reserved Page Frames" later in this chapter). |
| unsigned long | pages_low | Low watermark for page frame reclaiming; also used by the zone allocator as a threshold value (see the section "The Zone Allocator" later in this chapter). |
| unsigned long | pages_high | High watermark for page frame reclaiming; also used by the zone allocator as a threshold value. |
| unsigned long [] | lowmem_reserve | Specifies how many page frames in each zone must be reserved for handling low-on-memory critical situations. |
| struct per_cpu_pageset [] | pageset | Data structure used to implement special caches of single page frames (see the section "The Per-CPU Page Frame Cache" later in this chapter). |
| spinlock_t | lock | Spin lock protecting the descriptor. |
| struct free_area [] | free_area | Identifies the blocks of free page frames in the zone (see the section "The Buddy System Algorithm" later in this chapter). |
| spinlock_t | lru_lock | Spin lock for the active and inactive lists. |
| struct list_head | active_list | List of active pages in the zone (see Chapter 17). |
| struct list_head | inactive_list | List of inactive pages in the zone (see Chapter 17). |
| unsigned long | nr_scan_active | Number of active pages to be scanned when reclaiming memory (see the section "Low On Memory Reclaiming" in Chapter 17). |
| unsigned long | nr_scan_inactive | Number of inactive pages to be scanned when reclaiming memory. |
| unsigned long | nr_active | Number of pages in the zone's active list. |
| unsigned long | nr_inactive | Number of pages in the zone's inactive list. |
| unsigned long | pages_scanned | Counter used when doing page frame reclaiming in the zone. |
| int | all_unreclaimable | Flag set when the zone is full of unreclaimable pages. |
| int | temp_priority | Temporary zone's priority (used when doing page frame reclaiming). |
| int | prev_priority | Zone's priority ranging between 12 and 0 (used by the page frame reclaiming algorithm, see the section "Low On Memory Reclaiming" in Chapter 17). |
| wait_queue_head_t * | wait_table | Hash table of wait queues of processes waiting for one of the pages of the zone. |
| unsigned long | wait_table_size | Size of the wait queue hash table. |
| unsigned long | wait_table_bits | Power-of-2 order of the size of the wait queue hash table array. |
| struct pglist_data * | zone_pgdat | Memory node (see the earlier section "Non-Uniform Memory Access (NUMA)"). |
| struct page * | zone_mem_map | Pointer to first page descriptor of the zone. |
| unsigned long | zone_start_pfn | Index of the first page frame of the zone. |
| unsigned long | spanned_pages | Total size of zone in pages, including holes. |
| unsigned long | present_pages | Total size of zone in pages, excluding holes. |
| char * | name | Pointer to the conventional name of the zone: "DMA", "Normal", or "HighMem". |

Many fields of the zone structure are used for page frame reclaiming and will be described in Chapter 17.

Each page descriptor has links to the memory node and to the zone inside the node that includes the corresponding page frame. To save space, these links are not stored as classical pointers; rather, they are encoded as indices stored in the high bits of the flags field. In fact, the number of flags that characterize a page frame is limited, thus it is always possible to reserve the most significant bits of the flags field to encode the proper memory node and zone number. The page_zone( ) function receives as its parameter the address of a page descriptor; it reads the most significant bits of the flags field in the page descriptor, then it determines the address of the corresponding zone descriptor by looking in the zone_table array. This array is initialized at boot time with the addresses of all zone descriptors of all memory nodes.
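The encoding described above can be sketched in user space as follows. The bit widths (2 bits for the zone, 6 for the node), the reduced structs, and the set_page_links() helper are assumptions chosen for the example; only the idea of indexing zone_table with the most significant bits of flags comes from the text.

```c
#include <limits.h>

#define ZONE_BITS       2   /* assumed: enough for three zones */
#define NODE_BITS       6   /* assumed: up to 64 memory nodes */
#define NODEZONE_BITS   (NODE_BITS + ZONE_BITS)
#define NODEZONE_SHIFT  (sizeof(unsigned long) * CHAR_BIT - NODEZONE_BITS)

struct zone { const char *name; };
struct page { unsigned long flags; };

/* One slot per (node, zone) pair; the kernel fills this table at boot time. */
struct zone *zone_table[1 << NODEZONE_BITS];

/* Hypothetical helper: store node and zone indices in the top bits of flags,
 * leaving the ordinary status flags in the low bits untouched. */
void set_page_links(struct page *page, unsigned long node, unsigned long zone)
{
    unsigned long idx = (node << ZONE_BITS) | zone;
    unsigned long low_mask = (1UL << NODEZONE_SHIFT) - 1;

    page->flags = (page->flags & low_mask) | (idx << NODEZONE_SHIFT);
}

/* Read the top bits of flags and look the zone descriptor up in zone_table. */
struct zone *page_zone(const struct page *page)
{
    return zone_table[page->flags >> NODEZONE_SHIFT];
}
```

Storing an index of a few bits rather than a full pointer is what keeps the descriptor small; the price is one extra table lookup in page_zone().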

When the kernel invokes a memory allocation function, it must specify the zones that contain the requested page frames. The kernel usually specifies which zones it's willing to use. For instance, if a page frame must be directly mapped in the fourth gigabyte of linear addresses but it is not going to be used for ISA DMA transfers, then the kernel requests a page frame either in ZONE_NORMAL or in ZONE_DMA. Of course, the page frame should be obtained from ZONE_DMA only if ZONE_NORMAL does not have free page frames. To specify the preferred zones in a memory allocation request, the kernel uses the zonelist data structure, which is an array of zone descriptor pointers.

8.1.4. The Pool of Reserved Page Frames

Memory allocation requests can be satisfied in two different ways. If enough free memory is available, the request can be satisfied immediately. Otherwise, some memory reclaiming must take place, and the kernel control path that made the request is blocked until additional memory has been freed.

However, some kernel control paths cannot be blocked while requesting memory; this happens, for instance, when handling an interrupt or when executing code inside a critical region. In these cases, a kernel control path should issue atomic memory allocation requests (using the GFP_ATOMIC flag; see the later section "The Zoned Page Frame Allocator"). An atomic request never blocks: if there are not enough free pages, the allocation simply fails.

Although there is no way to ensure that an atomic memory allocation request never fails, the kernel tries hard to minimize the likelihood of this unfortunate event. In order to do this, the kernel reserves a pool of page frames for atomic memory allocation requests to be used only on low-on-memory conditions.

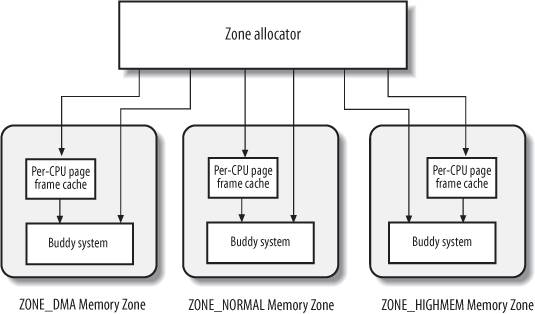

The amount of the reserved memory (in kilobytes) is stored in the min_free_kbytes variable. Its initial value is set during kernel initialization and depends on the amount of physical memory that is directly mapped in the kernel's fourth gigabyte of linear addresses; that is, it depends on the number of page frames included in the ZONE_DMA and ZONE_NORMAL memory zones:

However, initially min_free_kbytes cannot be lower than 128 or greater than 65,536.
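The following sketch shows how such an initial value could be computed. It assumes the rule used in the 2.6 kernel sources, namely the integer square root of 16 times the directly mapped memory in kilobytes, followed by the clamping just described. The function names and the simple square-root loop are choices made for this example.

```c
/* Integer square root: the largest r such that r * r <= x. */
unsigned long int_sqrt(unsigned long x)
{
    unsigned long r = 0;

    while ((r + 1) * (r + 1) <= x)
        r++;
    return r;
}

/* Initial size of the reserved pool, in kilobytes, for a given amount of
 * directly mapped memory (ZONE_DMA plus ZONE_NORMAL), also in kilobytes. */
unsigned long initial_min_free_kbytes(unsigned long lowmem_kbytes)
{
    unsigned long kb = int_sqrt(lowmem_kbytes * 16);

    if (kb < 128)
        kb = 128;        /* lower bound */
    if (kb > 65536)
        kb = 65536;      /* upper bound */
    return kb;
}
```

Under this assumption, a machine with 896 MB of directly mapped memory (917,504 KB) starts with a reserve of 3,831 KB, while a tiny machine with 512 KB is raised to the 128 KB minimum.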

The ZONE_DMA and ZONE_NORMAL memory zones contribute to the reserved memory with a number of page frames proportional to their relative sizes. For instance, if the ZONE_NORMAL zone is eight times bigger than ZONE_DMA, eight-ninths of the page frames will be taken from ZONE_NORMAL and one-ninth from ZONE_DMA.

The pages_min field of the zone descriptor stores the number of reserved page frames inside the zone. As we'll see in Chapter 17, this field also plays a role in the page frame reclaiming algorithm, together with the pages_low and pages_high fields. The pages_low field is always set to 5/4 of the value of pages_min, and pages_high is always set to 3/2 of the value of pages_min.
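A small sketch of how the three per-zone values relate, assuming the reserve is split in proportion to zone size as described above. The struct and function names are hypothetical, and integer division stands in for whatever rounding the kernel applies.

```c
/* The three per-zone thresholds discussed in the text. */
struct zone_marks {
    unsigned long pages_min;
    unsigned long pages_low;
    unsigned long pages_high;
};

/* reserve_pages: total reserved page frames (min_free_kbytes in pages)
 * zone_pages:    size of this zone, in page frames
 * lowmem_pages:  combined size of ZONE_DMA and ZONE_NORMAL, in page frames */
struct zone_marks zone_watermarks(unsigned long reserve_pages,
                                  unsigned long zone_pages,
                                  unsigned long lowmem_pages)
{
    struct zone_marks m;

    m.pages_min  = reserve_pages * zone_pages / lowmem_pages; /* proportional share */
    m.pages_low  = m.pages_min * 5 / 4;
    m.pages_high = m.pages_min * 3 / 2;
    return m;
}
```

For a reserve of 900 page frames, a ZONE_DMA of 4,096 frames, and a ZONE_NORMAL of 32,768 frames, the sketch assigns 100 reserved frames to ZONE_DMA and 800 to ZONE_NORMAL; the latter then gets pages_low = 1,000 and pages_high = 1,200.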

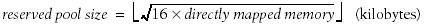

8.1.5. The Zoned Page Frame Allocator

The kernel subsystem that handles the memory allocation requests for groups of contiguous page frames is called the zoned page frame allocator. Its main components are shown in Figure 8-2.

The component named "zone allocator" receives the requests for allocation and deallocation of dynamic memory. In the case of allocation requests, the component searches a memory zone that includes a group of contiguous page frames that can satisfy the request (see the later section "The Zone Allocator"). Inside each zone, page frames are handled by a component named "buddy system" (see the later section "The Buddy System Algorithm"). To get better system performance, a small number of page frames are kept in cache to quickly satisfy the allocation requests for single page frames (see the later section "The Per-CPU Page Frame Cache").

8.1.5.1. Requesting and releasing page frames

Page frames can be requested by using six slightly different functions and macros. Unless otherwise stated, they return the linear address of the first allocated page or return NULL if the allocation failed.

alloc_pages(gfp_mask, order) Macro used to request 2^order contiguous page frames. It returns the address of the descriptor of the first allocated page frame or returns NULL if the allocation failed.

alloc_page(gfp_mask) Macro used to get a single page frame; it expands to:

    alloc_pages(gfp_mask, 0)

It returns the address of the descriptor of the allocated page frame or returns NULL if the allocation failed.

__get_free_pages(gfp_mask, order) Function that is similar to alloc_pages( ), but it returns the linear address of the first allocated page.

__get_free_page(gfp_mask) Macro used to get a single page frame; it expands to:

    __get_free_pages(gfp_mask, 0)

get_zeroed_page(gfp_mask) Function used to obtain a page frame filled with zeros; it invokes:

    alloc_pages(gfp_mask | __GFP_ZERO, 0)

and returns the linear address of the obtained page frame.

__get_dma_pages(gfp_mask, order) Macro used to get page frames suitable for DMA; it expands to:

    __get_free_pages(gfp_mask | __GFP_DMA, order)

The parameter gfp_mask is a group of flags that specify how to look for free page frames. The flags that can be used in gfp_mask are shown in Table 8-5.

Table 8-5. Flags used to request page frames

| Flag | Description |
|---|---|
| __GFP_DMA | The page frame must belong to the ZONE_DMA memory zone. Equivalent to GFP_DMA. |
| __GFP_HIGHMEM | The page frame may belong to the ZONE_HIGHMEM memory zone. |
| __GFP_WAIT | The kernel is allowed to block the current process waiting for free page frames. |
| __GFP_HIGH | The kernel is allowed to access the pool of reserved page frames. |
| __GFP_IO | The kernel is allowed to perform I/O transfers on low memory pages in order to free page frames. |
| __GFP_FS | If clear, the kernel is not allowed to perform filesystem-dependent operations. |
| __GFP_COLD | The requested page frames may be "cold" (see the later section "The Per-CPU Page Frame Cache"). |
| __GFP_NOWARN | A memory allocation failure will not produce a warning message. |
| __GFP_REPEAT | The kernel keeps retrying the memory allocation until it succeeds. |
| __GFP_NOFAIL | Same as __GFP_REPEAT. |
| __GFP_NORETRY | Do not retry a failed memory allocation. |
| __GFP_NO_GROW | The slab allocator does not allow a slab cache to be enlarged (see the later section "The Slab Allocator"). |
| __GFP_COMP | The page frame belongs to an extended page (see the section "Extended Paging" in Chapter 2). |
| __GFP_ZERO | The page frame returned, if any, must be filled with zeros. |

In practice, Linux uses the predefined combinations of flag values shown in Table 8-6; the group name is what you'll encounter as the argument of the six page frame allocation functions.

Table 8-6. Groups of flag values used to request page frames

| Group name | Corresponding flags |
|---|---|
| GFP_ATOMIC | __GFP_HIGH |
| GFP_NOIO | __GFP_WAIT |
| GFP_NOFS | __GFP_WAIT \| __GFP_IO |
| GFP_KERNEL | __GFP_WAIT \| __GFP_IO \| __GFP_FS |
| GFP_USER | __GFP_WAIT \| __GFP_IO \| __GFP_FS |
| GFP_HIGHUSER | __GFP_WAIT \| __GFP_IO \| __GFP_FS \| __GFP_HIGHMEM |

The __GFP_DMA and __GFP_HIGHMEM flags are called zone modifiers; they specify the zones searched by the kernel while looking for free page frames. The node_zonelists field of the contig_page_data node descriptor is an array of lists of zone descriptors representing the fallback zones: for each setting of the zone modifiers, the corresponding list includes the memory zones that could be used to satisfy the memory allocation request in case the original zone is short on page frames. In the 80 x 86 UMA architecture, the fallback zones are the following:

If the __GFP_DMA flag is set, page frames can be taken only from the ZONE_DMA memory zone.

Otherwise, if the __GFP_HIGHMEM flag is not set, page frames can be taken only from the ZONE_NORMAL and the ZONE_DMA memory zones, in order of preference.

Otherwise (the __GFP_HIGHMEM flag is set), page frames can be taken from ZONE_HIGHMEM, ZONE_NORMAL, and ZONE_DMA memory zones, in order of preference.
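The three fallback rules can be written down as a small user-space function. This is a sketch only: MOD_DMA and MOD_HIGHMEM are hypothetical stand-ins for the two zone modifiers (with arbitrary bit values), and fallback_zones() is an illustrative helper, whereas the kernel precomputes these lists in node_zonelists.

```c
/* Hypothetical stand-ins for the __GFP_DMA and __GFP_HIGHMEM zone modifiers. */
#define MOD_DMA      0x01u
#define MOD_HIGHMEM  0x02u

enum zone_type { ZONE_DMA, ZONE_NORMAL, ZONE_HIGHMEM, MAX_NR_ZONES };

/* Writes the zones to try, in order of preference, into out[] and
 * returns the number of entries written (at most MAX_NR_ZONES). */
int fallback_zones(unsigned int gfp_mask, enum zone_type out[MAX_NR_ZONES])
{
    int n = 0;

    if (gfp_mask & MOD_DMA) {
        out[n++] = ZONE_DMA;          /* DMA requests have no fallback */
        return n;
    }
    if (gfp_mask & MOD_HIGHMEM)
        out[n++] = ZONE_HIGHMEM;      /* high memory is preferred when allowed */
    out[n++] = ZONE_NORMAL;
    out[n++] = ZONE_DMA;              /* scarce DMA-capable frames are the last resort */
    return n;
}
```

The ordering reflects how scarce each zone is: a request that can live anywhere tries high memory first and touches the 16 MB ISA DMA region only when nothing else is free.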

Page frames can be released through each of the following four functions and macros:

__free_pages(page, order) This function checks the page descriptor pointed to by page; if the page frame is not reserved (i.e., if the PG_reserved flag is equal to 0), it decreases the usage counter of the descriptor (the _count field). If the number of users drops to 0, it assumes that 2^order contiguous page frames starting from the one corresponding to page are no longer used. In this case, the function releases the page frames as explained in the later section "The Zone Allocator."

free_pages(addr, order) This function is similar to __free_pages( ), but it receives as an argument the linear address addr of the first page frame to be released.

__free_page(page) This macro releases the page frame having the descriptor pointed to by page; it expands to:

    __free_pages(page, 0)

free_page(addr) This macro releases the page frame having the linear address addr; it expands to:

    free_pages(addr, 0)

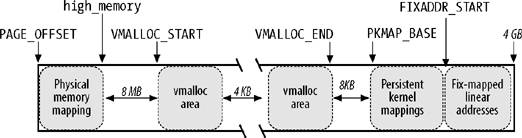

8.1.6. Kernel Mappings of High-Memory Page Frames

The linear address that corresponds to the end of the directly mapped physical memory, and thus to the beginning of the high memory, is stored in the high_memory variable, which is set to 896 MB. Page frames above the 896 MB boundary are not generally mapped in the fourth gigabyte of the kernel linear address spaces, so the kernel is unable to directly access them. This implies that each page allocator function that returns the linear address of the assigned page frame doesn't work for high-memory page frames, that is, for page frames in the ZONE_HIGHMEM memory zone.

For instance, suppose that the kernel invoked __get_free_pages(GFP_HIGHMEM, 0) to allocate a page frame in high memory. If the allocator assigned a page frame in high memory, __get_free_pages( ) cannot return its linear address because it doesn't exist; thus, the function returns NULL. In turn, the kernel cannot use the page frame; even worse, the page frame cannot be released because the kernel has lost track of it.

This problem does not exist on 64-bit hardware platforms, because the available linear address space is much larger than the amount of RAM that can be installed; in short, the ZONE_HIGHMEM zone of these architectures is always empty. On 32-bit platforms such as the 80 x 86 architecture, however, Linux designers had to find some way to allow the kernel to exploit all the available RAM, up to the 64 GB supported by PAE. The approach adopted is the following:

The allocation of high-memory page frames is done only through the alloc_pages( ) function and its alloc_page( ) shortcut. These functions do not return the linear address of the first allocated page frame, because if the page frame belongs to the high memory, such linear address simply does not exist. Instead, the functions return the linear address of the page descriptor of the first allocated page frame. These linear addresses always exist, because all page descriptors are allocated in low memory once and forever during the kernel initialization.

Page frames in high memory that do not have a linear address cannot be accessed by the kernel. Therefore, part of the last 128 MB of the kernel linear address space is dedicated to mapping high-memory page frames. Of course, this kind of mapping is temporary, otherwise only 128 MB of high memory would be accessible. Instead, by recycling linear addresses the whole high memory can be accessed, although at different times.

The kernel uses three different mechanisms to map page frames in high memory; they are called permanent kernel mapping, temporary kernel mapping, and noncontiguous memory allocation. In this section, we'll cover the first two techniques; the third one is discussed in the section "Noncontiguous Memory Area Management" later in this chapter.

Establishing a permanent kernel mapping may block the current process; this happens when no free Page Table entries exist that can be used as "windows" on the page frames in high memory. Thus, a permanent kernel mapping cannot be established in interrupt handlers and deferrable functions. Conversely, establishing a temporary kernel mapping never requires blocking the current process; its drawback, however, is that very few temporary kernel mappings can be established at the same time.

A kernel control path that uses a temporary kernel mapping must ensure that no other kernel control path is using the same mapping. This implies that the kernel control path can never block, otherwise another kernel control path might use the same window to map some other high memory page.

Of course, none of these techniques allow addressing the whole RAM simultaneously. After all, less than 128 MB of linear address space are left for mapping the high memory, while PAE supports systems having up to 64 GB of RAM.

8.1.6.1. Permanent kernel mappings

Permanent kernel mappings allow the kernel to establish long-lasting mappings of high-memory page frames into the kernel address space. They use a dedicated Page Table in the master kernel page tables. The pkmap_page_table variable stores the address of this Page Table, while the LAST_PKMAP macro yields the number of entries. As usual, the Page Table includes either 512 or 1,024 entries, according to whether PAE is enabled or disabled (see the section "The Physical Address Extension (PAE) Paging Mechanism" in Chapter 2); thus, the kernel can access at most 2 or 4 MB of high memory at once.

The Page Table maps the linear addresses starting from PKMAP_BASE. The pkmap_count array includes LAST_PKMAP counters, one for each entry of the pkmap_page_table Page Table. We distinguish three cases:

The counter is 0 The corresponding Page Table entry does not map any high-memory page frame and is usable.

The counter is 1 The corresponding Page Table entry does not map any high-memory page frame, but it cannot be used because the corresponding TLB entry has not been flushed since its last usage.

The counter is n (greater than 1) The corresponding Page Table entry maps a high-memory page frame, which is used by exactly n - 1 kernel components.
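The three cases, and the 2 MB or 4 MB window size mentioned earlier, can be restated as a few lines of C. All names in this sketch (pkmap_entry_state, pkmap_users, pkmap_window_bytes, and the enum) are hypothetical; the kernel itself only stores the raw counters in pkmap_count.

```c
#define FRAME_SIZE 4096UL   /* 4 KB page frames */

enum pkmap_state {
    PKMAP_FREE,     /* counter == 0: entry unused and usable */
    PKMAP_STALE,    /* counter == 1: unmapped, but TLB not flushed yet */
    PKMAP_IN_USE    /* counter  > 1: maps a high-memory page frame */
};

/* Classify one pkmap_count[] counter according to the three cases. */
enum pkmap_state pkmap_entry_state(int counter)
{
    if (counter == 0)
        return PKMAP_FREE;
    if (counter == 1)
        return PKMAP_STALE;
    return PKMAP_IN_USE;
}

/* Number of kernel components using the mapping: n - 1 when n > 1. */
int pkmap_users(int counter)
{
    return counter > 1 ? counter - 1 : 0;
}

/* Amount of high memory reachable at once through the dedicated Page Table. */
unsigned long pkmap_window_bytes(unsigned long last_pkmap)
{
    return last_pkmap * FRAME_SIZE;
}
```

Keeping the value 1 as a separate "stale" state lets the kernel postpone TLB flushes and perform them in batches instead of on every unmapping.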

To keep track of the association between high memory page frames and linear addresses induced by permanent kernel mappings, the kernel makes use of the page_address_htable hash table. This table contains one page_address_map data structure for each page frame in high memory that is currently mapped. In turn, this data structure contains a pointer to the page descriptor and the linear address assigned to the page frame.

The page_address( ) function returns the linear address associated with the page frame, or NULL if the page frame is in high memory and is not mapped. This function, which receives as its parameter a page descriptor pointer page, distinguishes two cases:

If the page frame is not in high memory (PG_highmem flag clear), the linear address always exists and is obtained by computing the page frame index, converting it into a physical address, and finally deriving the linear address corresponding to the physical address. This is accomplished by the following code:

    __va((unsigned long)(page - mem_map) << 12)

If the page frame is in high memory (PG_highmem flag set), the function looks into the page_address_htable hash table. If the page frame is found in the hash table, page_address( ) returns its linear address, otherwise it returns NULL.
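For the low-memory case, the computation can be tried out in user space with the sketch below. The PAGE_OFFSET value of 0xC0000000, the eight-entry mem_map, and the names phys_to_linear() and lowmem_page_address() are assumptions made for the example; phys_to_linear() plays the role of the kernel's __va() macro.

```c
#define PAGE_SHIFT   12
#define PAGE_OFFSET  0xC0000000UL   /* assumed start of the kernel's linear mapping */

struct page { unsigned long flags; };

struct page mem_map[8];             /* toy array of page descriptors */

/* Stand-in for __va(): physical address -> linear address. */
unsigned long phys_to_linear(unsigned long phys)
{
    return phys + PAGE_OFFSET;
}

/* Linear address of a page frame that is not in high memory. */
unsigned long lowmem_page_address(const struct page *page)
{
    unsigned long pfn = (unsigned long)(page - mem_map);  /* page frame index */

    return phys_to_linear(pfn << PAGE_SHIFT);             /* index -> physical -> linear */
}
```

The descriptor at index 5 of mem_map, for example, corresponds to physical address 0x5000 and therefore to linear address 0xC0005000 under these assumptions.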

The kmap( ) function establishes a permanent kernel mapping. It is essentially equivalent to the following code:

    void * kmap(struct page * page)
    {
        if (!PageHighMem(page))
            return page_address(page);
        return kmap_high(page);
    }

The kmap_high( ) function is invoked if the page frame really belongs to high memory. The function is essentially equivalent to the following code:

    void * kmap_high(struct page * page)
    {
        unsigned long vaddr;
        spin_lock(&kmap_lock);
        vaddr = (unsigned long) page_address(page);
        if (!vaddr)
            vaddr = map_new_virtual(page);
        pkmap_count[(vaddr-PKMAP_BASE) >> PAGE_SHIFT]++;
        spin_unlock(&kmap_lock);
        return (void *) vaddr;
    }

The function gets the kmap_lock spin lock to protect the Page Table against concurrent accesses in multiprocessor systems. Notice that there is no need to disable the interrupts, because kmap( ) cannot be invoked by interrupt handlers and deferrable functions. Next, the kmap_high( ) function checks whether the page frame is already mapped by invoking page_address( ). If not, the function invokes map_new_virtual( ) to insert the page frame physical address into an entry of pkmap_page_table and to add an element to the page_address_htable hash table. Then kmap_high( ) increases the counter corresponding to the linear address of the page frame to take into account the new kernel component that invoked this function. Finally, kmap_high( ) releases the kmap_lock spin lock and returns the linear address that maps the page frame.

The map_new_virtual( ) function essentially executes two nested loops:

    for (;;) {
        int count;
        DECLARE_WAITQUEUE(wait, current);
        for (count = LAST_PKMAP; count > 0; --count) {
            last_pkmap_nr = (last_pkmap_nr + 1) & (LAST_PKMAP - 1);
            if (!last_pkmap_nr) {
                flush_all_zero_pkmaps( );
                count = LAST_PKMAP;
            }
            if (!pkmap_count[last_pkmap_nr]) {
                unsigned long vaddr = PKMAP_BASE +
                                      (last_pkmap_nr << PAGE_SHIFT);
                set_pte(&(pkmap_page_table[last_pkmap_nr]),
                        mk_pte(page, __pgprot(0x63)));
                pkmap_count[last_pkmap_nr] = 1;
                set_page_address(page, (void *) vaddr);
                return vaddr;
            }
        }
        current->state = TASK_UNINTERRUPTIBLE;
        add_wait_queue(&pkmap_map_wait, &wait);
        spin_unlock(&kmap_lock);
        schedule( );
        remove_wait_queue(&pkmap_map_wait, &wait);
        spin_lock(&kmap_lock);
        if (page_address(page))
            return (unsigned long) page_address(page);
    }

In the inner loop, the function scans all counters in pkmap_count until it finds a null value. The large if block runs when an unused entry is found in pkmap_count. That block determines the linear address corresponding to the entry, creates an entry for it in the pkmap_page_table Page Table, sets the count to 1 because the entry is now used, invokes set_page_address( ) to insert a new element in the page_address_htable hash table, and returns the linear address.

The function starts where it left off last time, cycling through the pkmap_count array. It does this by preserving in a variable named last_pkmap_nr the index of the last used entry in the pkmap_page_table Page Table. Thus, the search starts from where it was left in the last invocation of the map_new_virtual( ) function.

When the last counter in pkmap_count is reached, the search restarts from the counter at index 0. Before continuing, however, map_new_virtual( ) invokes the flush_all_zero_pkmaps( ) function, which starts another scan of the counters, looking for those that have the value 1. Each counter that has a value of 1 denotes an entry in pkmap_page_table that is free but cannot be used because the corresponding TLB entry has not yet been flushed. flush_all_zero_pkmaps( ) resets their counters to zero, deletes the corresponding elements from the page_address_htable hash table, and issues TLB flushes on all entries of pkmap_page_table.

If the inner loop cannot find a null counter in pkmap_count, the map_new_virtual( ) function blocks the current process until some other process releases an entry of the pkmap_page_table Page Table. This is achieved by inserting current in the pkmap_map_wait wait queue, setting the current state to TASK_UNINTERRUPTIBLE, and invoking schedule( ) to relinquish the CPU. Once the process is awakened, the function checks whether another process has mapped the page by invoking page_address( ); if no other process has mapped the page yet, the inner loop is restarted.

The kunmap( ) function destroys a permanent kernel mapping established previously by kmap( ). If the page is really in the high memory zone, it invokes the kunmap_high( ) function, which is essentially equivalent to the following code:

    void kunmap_high(struct page * page)
    {
        spin_lock(&kmap_lock);
        if ((--pkmap_count[((unsigned long)page_address(page)
                            -PKMAP_BASE)>>PAGE_SHIFT]) == 1)
            if (waitqueue_active(&pkmap_map_wait))
                wake_up(&pkmap_map_wait);
        spin_unlock(&kmap_lock);
    }

The expression within the brackets computes the index into the pkmap_count array from the page's linear address. The counter is decreased and compared to 1. A successful comparison indicates that no process is using the page. The function can finally wake up processes in the wait queue filled by map_new_virtual( ), if any.

8.1.6.2. Temporary kernel mappings

Temporary kernel mappings are simpler to implement than permanent kernel mappings; moreover, they can be used inside interrupt handlers and deferrable functions, because requesting a temporary kernel mapping never blocks the current process.

Every page frame in high memory can be mapped through a window in the kernel address space, namely a Page Table entry that is reserved for this purpose. The number of windows reserved for temporary kernel mappings is quite small.

Each CPU has its own set of 13 windows, represented by the enum km_type data structure. Each symbol defined in this data structure, such as KM_BOUNCE_READ, KM_USER0, or KM_PTE0, identifies the linear address of a window.

The kernel must ensure that the same window is never used by two kernel control paths at the same time. Thus, each symbol in the km_type structure is dedicated to one kernel component and is named after the component. The last symbol, KM_TYPE_NR, does not represent a linear address by itself, but yields the number of different windows usable by every CPU.

Each symbol in km_type, except the last one, is an index of a fix-mapped linear address (see the section "Fix-Mapped Linear Addresses" in Chapter 2). The enum fixed_addresses data structure includes the symbols FIX_KMAP_BEGIN and FIX_KMAP_END; the latter is assigned to the index FIX_KMAP_BEGIN + (KM_TYPE_NR * NR_CPUS) - 1. In this manner, there are KM_TYPE_NR fix-mapped linear addresses for each CPU in the system. Furthermore, the kernel initializes the kmap_pte variable with the address of the Page Table entry corresponding to the fix_to_virt(FIX_KMAP_BEGIN) linear address.
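The index arithmetic can be checked with a small user-space sketch. The values of NR_CPUS and FIX_KMAP_BEGIN are arbitrary assumptions for the example, and kmap_window_index( ) is a hypothetical helper, not a kernel function.

```c
#include <assert.h>

#define KM_TYPE_NR      13    /* windows per CPU, as stated above */
#define NR_CPUS         4     /* assumed for the example */
#define FIX_KMAP_BEGIN  10    /* hypothetical position in enum fixed_addresses */
#define FIX_KMAP_END    (FIX_KMAP_BEGIN + (KM_TYPE_NR * NR_CPUS) - 1)

/* Fix-mapped index of the window "type" on CPU "cpu". */
int kmap_window_index(int type, int cpu)
{
    assert(type >= 0 && type < KM_TYPE_NR);
    assert(cpu >= 0 && cpu < NR_CPUS);
    return FIX_KMAP_BEGIN + type + KM_TYPE_NR * cpu;
}
```

Under these assumptions, the windows of consecutive CPUs occupy adjacent ranges of indexes, and the last window of the last CPU coincides with FIX_KMAP_END.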

To establish a temporary kernel mapping, the kernel invokes the kmap_atomic( ) function, which is essentially equivalent to the following code:

    void * kmap_atomic(struct page * page, enum km_type type)
    {
        enum fixed_addresses idx;
        unsigned long vaddr;
        current_thread_info( )->preempt_count++;
        if (!PageHighMem(page))
            return page_address(page);
        idx = type + KM_TYPE_NR * smp_processor_id( );
        vaddr = fix_to_virt(FIX_KMAP_BEGIN + idx);
        set_pte(kmap_pte-idx, mk_pte(page, 0x063));
        __flush_tlb_single(vaddr);
        return (void *) vaddr;
    }

The type argument and the CPU identifier retrieved through smp_processor_id( ) specify which fix-mapped linear address has to be used to map the requested page. The function returns the linear address of the page frame if it doesn't belong to high memory; otherwise, it sets up the Page Table entry corresponding to the fix-mapped linear address with the page's physical address and the bits Present, Accessed, Read/Write, and Dirty. Finally, the function flushes the proper TLB entry and returns the linear address.

To destroy a temporary kernel mapping, the kernel uses the kunmap_atomic( ) function. In the 80x86 architecture, this function decreases the preempt_count of the current process; thus, if the kernel control path was preemptable right before requiring a temporary kernel mapping, it will be preemptable again after it has destroyed the same mapping. Moreover, kunmap_atomic( ) checks whether the TIF_NEED_RESCHED flag of current is set and, if so, invokes schedule( ).

8.1.7. The Buddy System Algorithm

The kernel must establish a robust and efficient strategy for allocating groups of contiguous page frames. In doing so, it must deal with a well-known memory management problem called external fragmentation: frequent requests and releases of groups of contiguous page frames of different sizes may lead to a situation in which several small blocks of free page frames are "scattered" inside blocks of allocated page frames. As a result, it may become impossible to allocate a large block of contiguous page frames, even if there are enough free pages to satisfy the request.

There are essentially two ways to avoid external fragmentation:

Use the paging circuitry to map groups of noncontiguous free page frames into intervals of contiguous linear addresses.

Develop a suitable technique to keep track of the existing blocks of free contiguous page frames, avoiding as much as possible the need to split up a large free block to satisfy a request for a smaller one.

The second approach is preferred by the kernel for three good reasons:

In some cases, contiguous page frames are really necessary, because contiguous linear addresses are not sufficient to satisfy the request. A typical example is a memory request for buffers to be assigned to a DMA processor (see Chapter 13). Because most DMAs ignore the paging circuitry and access the address bus directly while transferring several disk sectors in a single I/O operation, the buffers requested must be located in contiguous page frames.

Even if contiguous page frame allocation is not strictly necessary, it offers the big advantage of leaving the kernel paging tables unchanged. What's wrong with modifying the Page Tables? As we know from Chapter 2, frequent Page Table modifications lead to higher average memory access times, because they make the CPU flush the contents of the translation lookaside buffers.

Large chunks of contiguous physical memory can be accessed by the kernel through 4 MB pages. This reduces the translation lookaside buffer misses, thus significantly speeding up the average memory access time (see the section "Translation Lookaside Buffers (TLB)" in Chapter 2).

The technique adopted by Linux to solve the external fragmentation problem is based on the well-known buddy system algorithm. All free page frames are grouped into 11 lists of blocks that contain groups of 1, 2, 4, 8, 16, 32, 64, 128, 256, 512, and 1024 contiguous page frames, respectively. The largest request of 1024 page frames corresponds to a chunk of 4 MB of contiguous RAM. The physical address of the first page frame of a block is a multiple of the group size: for example, the initial address of a 16-page-frame block is a multiple of 16 × 2^12 (2^12 = 4,096, which is the regular page size).

We'll show how the algorithm works through a simple example:

Assume there is a request for a group of 256 contiguous page frames (i.e., one megabyte). The algorithm checks first to see whether a free block in the 256-page-frame list exists. If there is no such block, the algorithm looks for the next larger block, that is, a free block in the 512-page-frame list. If such a block exists, the kernel allocates 256 of the 512 page frames to satisfy the request and inserts the remaining 256 page frames into the list of free 256-page-frame blocks. If there is no free 512-page block, the kernel then looks for the next larger block (i.e., a free 1024-page-frame block). If such a block exists, it allocates 256 of the 1024 page frames to satisfy the request, inserts the first 512 of the remaining 768 page frames into the list of free 512-page-frame blocks, and inserts the last 256 page frames into the list of free 256-page-frame blocks. If the list of 1024-page-frame blocks is empty, the algorithm gives up and signals an error condition.
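The example can be replayed with a toy model that keeps only a counter of free blocks per order instead of real lists. It is a minimal sketch under that simplification; struct toy_zone and toy_alloc( ) are hypothetical names and do not appear in the kernel.

```c
#include <assert.h>

#define MAX_ORDER 11   /* orders 0..10, i.e. blocks of 1..1024 page frames */

/* nr_free[k] counts the free blocks of 2^k page frames. */
struct toy_zone { unsigned int nr_free[MAX_ORDER]; };

/*
 * Take one block of the requested order, splitting a larger block if
 * needed. Returns the order of the block that was taken from the free
 * lists, or -1 if no block is large enough.
 */
int toy_alloc(struct toy_zone *z, int order)
{
    int k;

    assert(order >= 0 && order < MAX_ORDER);
    for (k = order; k < MAX_ORDER; k++)
        if (z->nr_free[k] > 0)
            break;
    if (k == MAX_ORDER)
        return -1;

    z->nr_free[k]--;
    /* Each halving gives one half back to the next lower order. */
    for (int j = k; j > order; j--)
        z->nr_free[j - 1]++;
    return k;
}
```

Starting from a single free 1024-page-frame block, a request of order 8 (256 page frames) leaves one free 512-page-frame block and one free 256-page-frame block, as in the example.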

The reverse operation, releasing blocks of page frames, gives rise to the name of this algorithm. The kernel attempts to merge pairs of free buddy blocks of size b together into a single block of size 2b. Two blocks are considered buddies if:

Both blocks have the same size, say b.

They are located in contiguous physical addresses.

The physical address of the first page frame of the first block is a multiple of 2 × b × 2^12.

The algorithm is iterative; if it succeeds in merging released blocks, it doubles b and tries again so as to create even bigger blocks.

8.1.7.1. Data structures

Linux 2.6 uses a different buddy system for each zone. Thus, in the 80x86 architecture, there are 3 buddy systems: the first handles the page frames suitable for ISA DMA, the second handles the "normal" page frames, and the third handles the high-memory page frames. Each buddy system relies on the following main data structures:

The mem_map array introduced previously. Actually, each zone is concerned with a subset of the mem_map elements. The first element in the subset and its number of elements are specified, respectively, by the zone_mem_map and size fields of the zone descriptor.

An array consisting of eleven elements of type free_area, one element for each group size. The array is stored in the free_area field of the zone descriptor.

Let us consider the kth element of the free_area array in the zone descriptor, which identifies all the free blocks of size 2^k. The free_list field of this element is the head of a doubly linked circular list that collects the page descriptors associated with the free blocks of 2^k pages. More precisely, this list includes the page descriptors of the starting page frame of every block of 2^k free page frames; the pointers to the adjacent elements in the list are stored in the lru field of the page descriptor.

Besides the head of the list, the kth element of the free_area array also includes the field nr_free, which specifies the number of free blocks of size 2^k pages. Of course, if there are no blocks of 2^k free page frames, nr_free is equal to 0 and the free_list list is empty (both pointers of free_list point to the free_list field itself).

Finally, the private field of the descriptor of the first page in a block of 2^k free pages stores the order of the block, that is, the number k. Thanks to this field, when a block of pages is freed, the kernel can determine whether the buddy of the block is also free and, if so, it can coalesce the two blocks in a single block of 2^(k+1) pages. It should be noted that up to Linux 2.6.10, the kernel used 10 arrays of flags to encode this information.
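The layout just described can be modeled in user-space C. The sketch below is a simplified stand-in: it reimplements a minimal list_head instead of using the kernel's list helpers, and the three functions are hypothetical names introduced only to show what an empty list and an insertion look like.

```c
#include <assert.h>

#define MAX_ORDER 11

/* Minimal doubly linked circular list node. */
struct list_head { struct list_head *next, *prev; };

/* One element of the per-zone array, as described above. */
struct free_area {
    struct list_head free_list;   /* head of the list of free blocks */
    unsigned long    nr_free;     /* number of free blocks of this order */
};

/* An empty list is a head whose two pointers refer to the head itself. */
void init_free_areas(struct free_area area[MAX_ORDER])
{
    for (int k = 0; k < MAX_ORDER; k++) {
        area[k].free_list.next = &area[k].free_list;
        area[k].free_list.prev = &area[k].free_list;
        area[k].nr_free = 0;
    }
}

int free_area_empty(const struct free_area *a)
{
    return a->free_list.next == &a->free_list;
}

/* Link a block's node right after the head and count the block. */
void free_area_add(struct free_area *a, struct list_head *node)
{
    assert(a && node);
    node->next = a->free_list.next;
    node->prev = &a->free_list;
    a->free_list.next->prev = node;
    a->free_list.next = node;
    a->nr_free++;
}
```

In the kernel, the node linked into the list is the lru field embedded in the page descriptor of the first page frame of the block; here a bare list_head plays that role.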

8.1.7.2. Allocating a block

The __rmqueue( ) function is used to find a free block in a zone. The function takes two arguments: the address of the zone descriptor, and order, which denotes the logarithm of the size of the requested block of free pages (0 for a one-page block, 1 for a two-page block, and so forth). If the page frames are successfully allocated, the __rmqueue( ) function returns the address of the page descriptor of the first allocated page frame. Otherwise, the function returns NULL.

The __rmqueue( ) function assumes that the caller has already disabled local interrupts and acquired the zone->lock spin lock, which protects the data structures of the buddy system. It performs a cyclic search through each list for an available block (denoted by an entry that doesn't point to the entry itself), starting with the list for the requested order and continuing if necessary to larger orders:

    struct free_area *area;
    unsigned int curr_order;
    for (curr_order = order; curr_order < 11; ++curr_order) {
        area = zone->free_area + curr_order;
        if (!list_empty(&area->free_list))
            goto block_found;
    }
    return NULL;

If the loop terminates, no suitable free block has been found, so __rmqueue( ) returns a NULL value. Otherwise, a suitable free block has been found; in this case, the descriptor of its first page frame is removed from the list and the value of free_pages in the zone descriptor is decreased:

    block_found:
        page = list_entry(area->free_list.next, struct page, lru);
        list_del(&page->lru);
        ClearPagePrivate(page);
        page->private = 0;
        area->nr_free--;
        zone->free_pages -= 1UL << order;

If the block found comes from a list of size curr_order greater than the requested size order, a while cycle is executed. The rationale behind these lines of code is as follows: when it becomes necessary to use a block of 2^k page frames to satisfy a request for 2^h page frames (h < k), the program allocates the first 2^h page frames and iteratively reassigns the last 2^k - 2^h page frames to the free_area lists that have indexes between h and k:

    size = 1 << curr_order;
    while (curr_order > order) {
        area--;
        curr_order--;
        size >>= 1;
        buddy = page + size;
        /* insert buddy as first element in the list */
        list_add(&buddy->lru, &area->free_list);
        area->nr_free++;
        buddy->private = curr_order;
        SetPagePrivate(buddy);
    }
    return page;

Because the __rmqueue( ) function has found a suitable free block, it returns the address page of the page descriptor associated with the first allocated page frame.

8.1.7.3. Freeing a block

The __free_pages_bulk( ) function implements the buddy system strategy for freeing page frames. It uses three basic input parameters:

page: The address of the descriptor of the first page frame included in the block to be released

zone: The address of the zone descriptor

order: The logarithmic size of the block

The function assumes that the caller has already disabled local interrupts and acquired the zone->lock spin lock, which protects the data structure of the buddy system. __free_pages_bulk( ) starts by declaring and initializing a few local variables:

    struct page * base = zone->zone_mem_map;
    unsigned long buddy_idx, page_idx = page - base;
    struct page * buddy, * coalesced;
    int order_size = 1 << order;

The page_idx local variable contains the index of the first page frame in the block with respect to the first page frame of the zone.

The order_size local variable is used to increase the counter of free page frames in the zone:

    zone->free_pages += order_size;

The function now performs a cycle executed at most 10 - order times, once for each possibility for merging a block with its buddy. The function starts with the smallest-sized block and moves up to the top size:

    while (order < 10) {
        buddy_idx = page_idx ^ (1 << order);
        buddy = base + buddy_idx;
        if (!page_is_buddy(buddy, order))
            break;
        list_del(&buddy->lru);
        zone->free_area[order].nr_free--;
        ClearPagePrivate(buddy);
        buddy->private = 0;
        page_idx &= buddy_idx;
        order++;
    }

In the body of the loop, the function looks for the index buddy_idx of the block, which is buddy to the one having the page descriptor index page_idx. It turns out that this index can be easily computed as:

    buddy_idx = page_idx ^ (1 << order);

In fact, an Exclusive OR (XOR) using the (1<<order) mask switches the value of the order-th bit of page_idx. Therefore, if the bit was previously zero, buddy_idx is equal to page_idx + order_size; conversely, if the bit was previously one, buddy_idx is equal to page_idx - order_size.
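A few concrete values make the XOR trick easier to follow. The two helpers below are hypothetical user-space functions that isolate the index arithmetic used in the loop: the first computes the buddy index, the second the index of the merged block (the bitwise AND of the two indexes, as in the page_idx &= buddy_idx statement).

```c
#include <assert.h>

/* Index of the buddy of the block of 2^order pages starting at page_idx. */
unsigned long buddy_index(unsigned long page_idx, unsigned int order)
{
    /* A block of 2^order pages always starts at a multiple of 2^order. */
    assert((page_idx & ((1UL << order) - 1)) == 0);
    return page_idx ^ (1UL << order);
}

/* Index of the first page of the block obtained by merging both buddies. */
unsigned long merged_index(unsigned long page_idx, unsigned int order)
{
    return page_idx & buddy_index(page_idx, order);
}
```

For blocks of order 3 (8 page frames), the block at index 16 has its buddy at index 24 and vice versa; whichever of the two is being freed, the merged block of order 4 starts at index 16.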

Once the buddy block index is known, the page descriptor of the buddy block can be easily obtained as:

    buddy = base + buddy_idx;

Now the function invokes page_is_buddy() to check if buddy describes the first page of a block of order_size free page frames.

    int page_is_buddy(struct page *page, int order)
    {
        if (PagePrivate(page) && page->private == order &&
            !PageReserved(page) && page_count(page) == 0)
            return 1;
        return 0;
    }

As you see, the buddy's first page must be free (_count field equal to -1), it must belong to the dynamic memory (PG_reserved bit clear), its private field must be meaningful (PG_private bit set), and finally the private field must store the order of the block being freed.

If all these conditions are met, the buddy block is free and the function removes the buddy block from the list of free blocks of order order, and performs one more iteration looking for buddy blocks twice as big.

If at least one of the conditions in page_is_buddy( ) is not met, the function breaks out of the cycle, because the free block obtained cannot be merged further with other free blocks. The function inserts it in the proper list and updates the private field of the first page frame with the order of the block size:

    coalesced = base + page_idx;
    coalesced->private = order;
    SetPagePrivate(coalesced);
    list_add(&coalesced->lru, &zone->free_area[order].free_list);
    zone->free_area[order].nr_free++;

8.1.8. The Per-CPU Page Frame Cache

As we will see later in this chapter, the kernel often requests and releases single page frames. To boost system performance, each memory zone defines a per-CPU page frame cache. Each per-CPU cache includes some pre-allocated page frames to be used for single memory requests issued by the local CPU.

Actually, there are two caches for each memory zone and for each CPU: a hot cache, which stores page frames whose contents are likely to be included in the CPU's hardware cache, and a cold cache.

Taking a page frame from the hot cache is beneficial for system performance if either the kernel or a User Mode process will write into the page frame right after the allocation. In fact, every access to a memory cell of the page frame will result in a line of the hardware cache being "stolen" from another page frame (unless, of course, the hardware cache already includes a line that maps the cell of the "hot" page frame just accessed).

Conversely, taking a page frame from the cold cache is convenient if the page frame is going to be filled with a DMA operation. In this case, the CPU is not involved and no line of the hardware cache will be modified. Taking the page frame from the cold cache preserves the reserve of hot page frames for the other kinds of memory allocation requests.

The main data structure implementing the per-CPU page frame cache is an array of per_cpu_pageset data structures stored in the pageset field of the memory zone descriptor. The array includes one element for each CPU; this element, in turn, consists of two per_cpu_pages descriptors, one for the hot cache and the other for the cold cache. The fields of the per_cpu_pages descriptor are listed in Table 8-7.

Table 8-7. The fields of the per_cpu_pages descriptor

| Type | Name | Description |
|---|---|---|
| int | count | Number of page frames in the cache |
| int | low | Low watermark for cache replenishing |
| int | high | High watermark for cache depletion |
| int | batch | Number of page frames to be added or subtracted from the cache |
| struct list_head | list | List of descriptors of the page frames included in the cache |

The kernel monitors the size of both the hot and cold caches by using two watermarks: if the number of page frames falls below the low watermark, the kernel replenishes the proper cache by allocating batch single page frames from the buddy system; otherwise, if the number of page frames rises above the high watermark, the kernel releases to the buddy system batch page frames in the cache. The values of batch, low, and high essentially depend on the number of page frames included in the memory zone.

8.1.8.1. Allocating page frames through the per-CPU page frame caches

The buffered_rmqueue( ) function allocates page frames in a given memory zone. It makes use of the per-CPU page frame caches to handle single page frame requests.

The parameters are the address of the memory zone descriptor, the order of the memory allocation request order, and the allocation flags gfp_flags. If the __GFP_COLD flag is set in gfp_flags, the page frame should be taken from the cold cache, otherwise it should be taken from the hot cache (this flag is meaningful only for single page frame requests). The function essentially executes the following operations:

1. If order is not equal to 0, the per-CPU page frame cache cannot be used: the function jumps to step 4.

2. Checks whether the memory zone's local per-CPU cache identified by the value of the __GFP_COLD flag has to be replenished (the count field of the per_cpu_pages descriptor is lower than or equal to the low field). In this case, it executes the following substeps:

   a. Allocates batch single page frames from the buddy system by repeatedly invoking the __rmqueue( ) function.

   b. Inserts the descriptors of the allocated page frames in the cache's list.

   c. Updates the value of count by adding the number of page frames actually allocated.

3. If count is positive, the function gets a page frame from the cache's list, decreases count, and jumps to step 5. (Observe that a per-CPU page frame cache could be empty; this happens when the __rmqueue( ) function invoked in step 2a fails to allocate any page frames.)

4. Here, the memory request has not yet been satisfied, either because the request spans several contiguous page frames, or because the selected page frame cache is empty. Invokes the __rmqueue( ) function to allocate the requested page frames from the buddy system.

5. If the memory request has been satisfied, the function initializes the page descriptor of the (first) page frame: clears some flags, sets the private field to zero, and sets the page frame reference counter to one. Moreover, if the __GFP_ZERO flag in gfp_flags is set, it fills the allocated memory area with zeros.

6. Returns the page descriptor address of the (first) page frame, or NULL if the memory allocation request failed.
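The single page frame path (steps 2 to 4) can be sketched with a toy model in which the cache and the buddy system are reduced to counters. Everything here is an assumption made for illustration: struct toy_pcp, struct toy_buddy, and both functions are hypothetical, and page descriptors are not modeled at all.

```c
#include <assert.h>

/* Toy per-CPU cache: only the counters of the descriptor are kept. */
struct toy_pcp { int count, low, high, batch; };

/* Toy buddy system: a pool of single free page frames. */
struct toy_buddy { int free_pages; };

/* Stand-in for repeated order-0 __rmqueue() calls; returns frames obtained. */
int toy_rmqueue_bulk(struct toy_buddy *b, int want)
{
    int got = want < b->free_pages ? want : b->free_pages;

    b->free_pages -= got;
    return got;
}

/* Allocate one page frame through the cache: 1 on success, 0 on failure. */
int toy_alloc_single(struct toy_pcp *pcp, struct toy_buddy *b)
{
    assert(pcp->batch > 0);
    if (pcp->count <= pcp->low)               /* step 2: replenish */
        pcp->count += toy_rmqueue_bulk(b, pcp->batch);
    if (pcp->count > 0) {                     /* step 3: take from the cache */
        pcp->count--;
        return 1;
    }
    return toy_rmqueue_bulk(b, 1);            /* step 4: ask the buddy system */
}
```

With low equal to 0 and batch equal to 2, an empty cache is refilled with two page frames on the first request; the second request is served from the cache without touching the buddy system.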

8.1.8.2. Releasing page frames to the per-CPU page frame caches

In order to release a single page frame to a per-CPU page frame cache, the kernel makes use of the free_hot_page( ) and free_cold_page( ) functions. Both of them are simple wrappers for the free_hot_cold_page( ) function, which receives as its parameters the descriptor address page of the page frame to be released and a cold flag specifying either the hot cache or the cold cache.

The free_hot_cold_page( ) function executes the following operations:

1. Gets from the page->flags field the address of the memory zone descriptor including the page frame (see the earlier section "Non-Uniform Memory Access (NUMA)").

2. Gets the address of the per_cpu_pages descriptor of the zone's cache selected by the cold flag.

3. Checks whether the cache should be depleted: if count is higher than or equal to high, invokes the free_pages_bulk( ) function, passing to it the zone descriptor, the number of page frames to be released (batch field), the address of the cache's list, and the number zero (for 0-order page frames). In turn, the latter function repeatedly invokes the __free_pages_bulk( ) function to release the specified number of page frames, taken from the cache's list, to the buddy system of the memory zone.

4. Adds the page frame to be released to the cache's list, and increases the count field.
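The depletion check can be sketched in the same spirit, with the cache and the buddy system reduced to counters. The structures and the function are hypothetical and only model the bookkeeping, not the page lists.

```c
#include <assert.h>

/* Toy per-CPU cache and toy buddy system, reduced to counters. */
struct toy_pcp { int count, low, high, batch; };
struct toy_buddy { int free_pages; };

/*
 * Release one page frame to the cache. If the cache has reached the high
 * watermark, a batch of page frames is first given back to the buddy system.
 */
void toy_free_single(struct toy_pcp *pcp, struct toy_buddy *b)
{
    assert(pcp->batch > 0);
    if (pcp->count >= pcp->high) {
        int n = pcp->batch < pcp->count ? pcp->batch : pcp->count;

        b->free_pages += n;    /* stand-in for free_pages_bulk() */
        pcp->count -= n;
    }
    pcp->count++;              /* the released frame joins the cache */
}
```

With high equal to 6 and batch equal to 2, releasing a page frame into a cache that already holds 6 frames first moves 2 of them to the buddy system, so the cache ends up with 5.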

It should be noted that in the current version of the Linux 2.6 kernel, no page frame is ever released to the cold cache: the kernel always assumes the freed page frame is hot with respect to the hardware cache. Of course, this does not mean that the cold cache is empty: the cache is replenished by buffered_rmqueue( ) when the low watermark has been reached.

8.1.9. The Zone Allocator

The zone allocator is the frontend of the kernel page frame allocator. This component must locate a memory zone that includes a number of free page frames large enough to satisfy the memory request. This task is not as simple as it could appear at first glance, because the zone allocator must satisfy several goals:

It should protect the pool of reserved page frames (see the earlier section "The Pool of Reserved Page Frames").

It should trigger the page frame reclaiming algorithm (see Chapter 17) when memory is scarce and blocking the current process is allowed; once some page frames have been freed, the zone allocator will retry the allocation.

It should preserve the small, precious ZONE_DMA memory zone, if possible. For instance, the zone allocator should be somewhat reluctant to assign page frames in the ZONE_DMA memory zone if the request was for ZONE_NORMAL or ZONE_HIGHMEM page frames.

We have seen in the earlier section "The Zoned Page Frame Allocator" that every request for a group of contiguous page frames is eventually handled by executing the alloc_pages macro. This macro, in turn, ends up invoking the __alloc_pages( ) function, which is the core of the zone allocator. It receives three parameters:

gfp_mask: The flags specified in the memory allocation request (see earlier Table 8-5)

order: The logarithmic size of the group of contiguous page frames to be allocated

zonelist: Pointer to a zonelist data structure describing, in order of preference, the memory zones suitable for the memory allocation

The __alloc_pages( ) function scans every memory zone included in the zonelist data structure. The code that does this looks like the following:

    for (i = 0; (z=zonelist->zones[i]) != NULL; i++) {
        if (zone_watermark_ok(z, order, ...)) {
            page = buffered_rmqueue(z, order, gfp_mask);
            if (page)
                return page;
        }
    }

For each memory zone, the function compares the number of free page frames with a threshold value that depends on the memory allocation flags, on the type of current process, and on how many times the zone has already been checked by the function. In fact, if free memory is scarce, every memory zone is typically scanned several times, each time with a lower threshold on the minimal amount of free memory required for the allocation. The previous block of code is thus replicated several times, with minor variations, in the body of the __alloc_pages( ) function. The buffered_rmqueue( ) function has been described already in the earlier section "The Per-CPU Page Frame Cache": it returns the page descriptor of the first allocated page frame, or NULL if the memory zone does not include a group of contiguous page frames of the requested size.

The zone_watermark_ok( ) auxiliary function receives several parameters, which determine a threshold min on the number of free page frames in the memory zone. In particular, the function returns the value 1 if the following two conditions are met:

Besides the page frames to be allocated, there are at least min free page frames in the memory zone, not including the page frames in the low-on-memory reserve (lowmem_reserve field of the zone descriptor).

Besides the page frames to be allocated, there are at least min/2^k free page frames in blocks of order at least k, for each k between 1 and the order of the allocation. Therefore, if order is greater than zero, there must be at least min/2 free page frames in blocks of size at least 2; if order is greater than one, there must be at least min/4 free page frames in blocks of size at least 4; and so on.

The value of the threshold min is determined by zone_watermark_ok( ) as follows:

The base value is passed as a parameter of the function and can be one of the pages_min, pages_low, and pages_high zone's watermarks (see the section "The Pool of Reserved Page Frames" earlier in this chapter).

The base value is divided by two if the gfp_high flag passed as parameter is set. Usually, this flag is equal to one if the __GFP_HIGH flag is set in the gfp_mask, that is, if the request is allowed to draw on the pool of reserved page frames.

The threshold value is further reduced by one-fourth if the can_try_harder flag passed as parameter is set. This flag is usually equal to one if either the __GFP_WAIT flag is clear in gfp_mask (the request cannot block), or if the current process is a real-time process and the memory allocation is done in process context (outside of interrupt handlers and deferrable functions).
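The two conditions and the threshold adjustments can be put together in a user-space sketch. It is modeled on the description above rather than copied from the kernel: struct toy_zone keeps a single lowmem_reserve value and a per-order count of free blocks, and toy_watermark_ok( ) is a hypothetical name.

```c
#include <assert.h>

#define MAX_ORDER 11

/* Toy zone: just the quantities the check needs. */
struct toy_zone {
    long free_pages;                    /* free page frames in the zone  */
    long lowmem_reserve;                /* low-on-memory reserve         */
    unsigned long nr_free[MAX_ORDER];   /* free blocks of each order     */
};

/* Returns 1 if an allocation of 2^order frames passes the watermark check. */
int toy_watermark_ok(const struct toy_zone *z, int order, long base,
                     int can_try_harder, int gfp_high)
{
    long min = base;
    /* Free frames that would remain, counted so that "<=" means "too few". */
    long free_pages = z->free_pages - (1L << order) + 1;

    assert(order >= 0 && order < MAX_ORDER);
    if (gfp_high)
        min -= min / 2;                 /* base value divided by two */
    if (can_try_harder)
        min -= min / 4;                 /* reduced by a further fourth */

    if (free_pages <= min + z->lowmem_reserve)
        return 0;                       /* first condition fails */
    for (int o = 0; o < order; o++) {
        /* Blocks of order o are too small for the next, larger order. */
        free_pages -= (long)(z->nr_free[o] << o);
        min >>= 1;                      /* min/2, min/4, ... */
        if (free_pages <= min)
            return 0;                   /* second condition fails */
    }
    return 1;
}
```

For instance, a zone with 100 free page frames that all sit in single-frame blocks passes the check for an order-0 request with a base of 50, but fails it for an order-1 request, because no free frames are left in blocks of size 2 or more.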

The __alloc_pages( ) function essentially executes the following steps:

1. Performs a first scanning of the memory zones (see the block of code shown earlier). In this first scan, the min threshold value is set to z->pages_low, where z points to the zone descriptor being analyzed (the can_try_harder and gfp_high parameters are set to zero).

2. If the function did not terminate in the previous step, there is not much free memory left: the function awakens the kswapd kernel threads to start reclaiming page frames asynchronously (see Chapter 17).

3. Performs a second scanning of the memory zones, passing as base threshold the value z->pages_min. As explained previously, the actual threshold is determined also by the can_try_harder and gfp_high flags. This step is nearly identical to step 1, except that the function is using a lower threshold.

4. If the function did not terminate in the previous step, the system is definitely low on memory. If the kernel control path that issued the memory allocation request is not an interrupt handler or a deferrable function and it is trying to reclaim page frames (either the PF_MEMALLOC flag or the PF_MEMDIE flag of current is set), the function then performs a third scanning of the memory zones, trying to allocate the page frames ignoring the low-on-memory thresholds, that is, without invoking zone_watermark_ok( ). This is the only case where the kernel control path is allowed to deplete the low-on-memory reserve of pages specified by the lowmem_reserve field of the zone descriptor. In fact, in this case the kernel control path that issued the memory request is ultimately trying to free page frames, thus it should get what it has requested, if at all possible. If no memory zone includes enough page frames, the function returns NULL to notify the caller of the failure.

5. Here, the invoking kernel control path is not trying to reclaim memory. If the __GFP_WAIT flag of gfp_mask is not set, the function returns NULL to notify the kernel control path of the memory allocation failure: in this case, there is no way to satisfy the request without blocking the current process.

6. Here the current process can be blocked: invokes cond_resched() to check whether some other process needs the CPU.

7. Sets the PF_MEMALLOC flag of current, to denote the fact that the process is ready to perform memory reclaiming.

8. Stores in current->reclaim_state a pointer to a reclaim_state structure whose only field, reclaimed_slab, is initialized to zero.

9. Invokes try_to_free_pages( ) to look for some page frames to be reclaimed (see the section "Low On Memory Reclaiming" in Chapter 17). The latter function may block the current process. Once that function returns, __alloc_pages( ) resets the PF_MEMALLOC flag of current and invokes once more cond_resched().

10. If the previous step has freed some page frames, the function performs yet another scanning of the memory zones equal to the one performed in step 3. If the memory allocation request cannot be satisfied, the function determines whether it should continue scanning the memory zone: if the __GFP_NORETRY flag is clear and either the memory allocation request spans up to eight page frames, or one of the __GFP_REPEAT and __GFP_NOFAIL flags is set, the function invokes blk_congestion_wait( ) to put the process to sleep for a while (see Chapter 14), and it jumps back to step 6. Otherwise, the function returns NULL to notify the caller that the memory allocation failed.

11. If no page frame has been freed in step 9, the kernel is in deep trouble, because free memory is dangerously low and it was not possible to reclaim any page frame. Perhaps the time has come to take a crucial decision. If the kernel control path is allowed to perform the filesystem-dependent operations needed to kill a process (the __GFP_FS flag in gfp_mask is set) and the __GFP_NORETRY flag is clear, performs the following substeps:

    a. Scans once again the memory zones with a threshold value equal to z->pages_high.

    b. Invokes out_of_memory( ) to start freeing some memory by killing a victim process.

    c. Jumps back to step 1.

Because the watermark used in step 11a is much higher than the watermarks used in the previous scannings, that step is likely to fail. Actually, step 11a succeeds only if another kernel control path is already killing a process to reclaim its memory. Thus, step 11a prevents two innocent processes from being killed instead of one.
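The retry decision taken after try_to_free_pages( ) has freed some page frames but the allocation still cannot be satisfied can be sketched as a small predicate. The flag names and bit values below are placeholders chosen for this example, not the kernel's definitions, and should_retry is a hypothetical helper.

```c
#include <assert.h>

/* Illustrative bit values only; the kernel defines its own __GFP_* flags. */
#define GFP_REPEAT_BIT   0x1u
#define GFP_NOFAIL_BIT   0x2u
#define GFP_NORETRY_BIT  0x4u

/*
 * Returns 1 if the allocator should sleep for a while and rescan,
 * 0 if it should give up and return NULL to the caller.
 */
int should_retry(unsigned int gfp_mask, unsigned int order)
{
    assert(order < 32);

    if (gfp_mask & GFP_NORETRY_BIT)
        return 0;                 /* the caller asked not to retry */
    if ((1u << order) <= 8)
        return 1;                 /* request spans up to eight page frames */
    return (gfp_mask & (GFP_REPEAT_BIT | GFP_NOFAIL_BIT)) != 0;
}
```

A request of order 3 (eight page frames) is retried even with no flags set, whereas a request of order 4 is retried only if one of the repeat or no-fail flags is present.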

8.1.9.1. Releasing a group of page frames

The zone allocator also takes care of releasing page frames; thankfully, releasing memory is a lot easier than allocating it.

All kernel macros and functions that release page frames (described in the earlier section "The Zoned Page Frame Allocator") rely on the __free_pages( ) function. It receives as its parameters the address of the page descriptor of the first page frame to be released (page), and the logarithmic size of the group of contiguous page frames to be released (order). The function executes the following steps:

1. Checks that the first page frame really belongs to dynamic memory (its PG_reserved flag is cleared); if not, terminates.

2. Decreases the page->_count usage counter; if it is still greater than or equal to zero, terminates.

3. If order is equal to zero, the function invokes free_hot_page( ) to release the page frame to the per-CPU hot cache of the proper memory zone (see the earlier section "The Per-CPU Page Frame Cache").

4. If order is greater than zero, it adds the page frames in a local list and invokes the free_pages_bulk( ) function to release them to the buddy system of the proper memory zone (see step 3 in the description of free_hot_cold_page( ) in the earlier section "The Per-CPU Page Frame Cache").
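The release steps above amount to a short decision procedure. The following user-space sketch models only that decision; struct page_model, the release_action values, and free_pages_model are hypothetical names, and the counter convention (a value of -1 once the last user is gone) is an assumption taken from the "greater than or equal to zero" test in the text.

```c
#include <assert.h>
#include <stddef.h>

enum release_action {
    RELEASE_NONE,       /* reserved page frame, or still in use */
    RELEASE_HOT_CACHE,  /* single frame: goes to the per-CPU hot cache */
    RELEASE_BUDDY       /* group of 2^order frames: goes to the buddy system */
};

/* Hypothetical stand-in for the fields of the page descriptor used here. */
struct page_model {
    int reserved;  /* models the PG_reserved flag */
    int count;     /* models page->_count */
};

/* Decides what a release request would do, updating the usage counter. */
enum release_action free_pages_model(struct page_model *page,
                                     unsigned int order)
{
    assert(page != NULL);

    if (page->reserved)
        return RELEASE_NONE;        /* not dynamic memory */
    if (--page->count >= 0)
        return RELEASE_NONE;        /* other users remain */
    return order == 0 ? RELEASE_HOT_CACHE : RELEASE_BUDDY;
}
```

A page frame whose counter is 1 needs two release calls before it actually leaves: the first only decrements the counter, the second hands the frames back.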


8.2. Memory Area Management

This section deals with memory areas, that is, with sequences of memory cells having contiguous physical addresses and an arbitrary length.

The buddy system algorithm adopts the page frame as the basic memory area. This is fine for dealing with relatively large memory requests, but how are we going to deal with requests for small memory areas, say a few tens or hundreds of bytes?

Clearly, it would be quite wasteful to allocate a full page frame to store a few bytes. A better approach instead consists of introducing new data structures that describe how small memory areas are allocated within the same page frame. In doing so, we introduce a new problem called internal fragmentation. It is caused by a mismatch between the size of the memory request and the size of the memory area allocated to satisfy the request.

A classical solution (adopted by early Linux versions) consists of providing memory areas whose sizes are geometrically distributed; in other words, the size depends on a power of 2 rather than on the size of the data to be stored. In this way, no matter what the memory request size is, we can ensure that the internal fragmentation is always smaller than 50 percent. Following this approach, the kernel creates 13 geometrically distributed lists of free memory areas whose sizes range from 32 to 131,072 bytes. The buddy system is invoked both to obtain additional page frames needed to store new memory areas and, conversely, to release page frames that no longer contain memory areas. A dynamic list is used to keep track of the free memory areas contained in each page frame.
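The power-of-2 scheme can be made concrete with a small sketch that maps a request size to one of the 13 size classes between 32 and 131,072 bytes. The function and macro names are invented for this example; only the size range and the number of lists come from the text.

```c
#include <assert.h>
#include <stddef.h>

#define MIN_AREA_SIZE    32u      /* smallest memory area */
#define MAX_AREA_SIZE    131072u  /* largest memory area */
#define NUM_SIZE_CLASSES 13       /* 32 * 2^0 ... 32 * 2^12 */

/* Returns the index (0..12) of the smallest class that fits size, or -1. */
int size_class_index(size_t size)
{
    size_t area = MIN_AREA_SIZE;
    int idx = 0;

    if (size == 0 || size > MAX_AREA_SIZE)
        return -1;
    while (area < size) {
        area <<= 1;   /* next power of 2 */
        idx++;
    }
    assert(idx < NUM_SIZE_CLASSES);
    return idx;
}

/* Returns the size in bytes of the memory areas in class idx. */
size_t size_class_bytes(int idx)
{
    assert(idx >= 0 && idx < NUM_SIZE_CLASSES);
    return (size_t)MIN_AREA_SIZE << idx;
}
```

A 33-byte request lands in the 64-byte class and wastes 31 bytes, just under half of the area. Note that the 50 percent bound applies to requests larger than 16 bytes; anything smaller still occupies a full 32-byte area.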

8.2.1. The Slab Allocator

Running a memory area allocation algorithm on top of the buddy algorithm is not particularly efficient. A better algorithm is derived from the slab allocator schema that was adopted for the first time in the Sun Microsystems Solaris 2.4 operating system. It is based on the following premises:

- The type of data to be stored may affect how memory areas are allocated; for instance, when allocating a page frame to a User Mode process, the kernel invokes the get_zeroed_page( ) function, which fills the page with zeros. The concept of a slab allocator expands upon this idea and views the memory areas as objects consisting of both a set of data structures and a couple of functions or methods called the constructor and destructor. The former initializes the memory area while the latter deinitializes it. To avoid initializing objects repeatedly, the slab allocator does not discard the objects that have been allocated and then released but instead saves them in memory. When a new object is then requested, it can be taken from memory without having to be reinitialized.

- The kernel functions tend to request memory areas of the same type repeatedly. For instance, whenever the kernel creates a new process, it allocates memory areas for some fixed size tables such as the process descriptor, the open file object, and so on (see Chapter 3). When a process terminates, the memory areas used to contain these tables can be reused. Because processes are created and destroyed quite frequently, without the slab allocator, the kernel wastes time allocating and deallocating the page frames containing the same memory areas repeatedly; the slab allocator allows them to be saved in a cache and reused quickly.

- Requests for memory areas can be classified according to their frequency. Requests of a particular size that are expected to occur frequently can be handled most efficiently by creating a set of special-purpose objects that have the right size, thus avoiding internal fragmentation. Meanwhile, sizes that are rarely encountered can be handled through an allocation scheme based on objects in a series of geometrically distributed sizes (such as the power-of-2 sizes used in early Linux versions), even if this approach leads to internal fragmentation.

- There is another subtle bonus in introducing objects whose sizes are not geometrically distributed: the initial addresses of the data structures are less prone to be concentrated on physical addresses whose values are a power of 2. This, in turn, leads to better performance by the processor hardware cache.

- Hardware cache performance creates an additional reason for limiting calls to the buddy system allocator as much as possible. Every call to a buddy system function "dirties" the hardware cache, thus increasing the average memory access time. The impact of a kernel function on the hardware cache is called the function footprint; it is defined as the percentage of cache overwritten by the function when it terminates. Clearly, large footprints lead to a slower execution of the code executed right after the kernel function, because the hardware cache is by now filled with useless information.
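The first premise, keeping released objects around so that they need not be constructed again, can be illustrated with a toy user-space object cache. This is only a sketch of the idea under simplifying assumptions (a fixed-size stash, malloc( ) as the backing allocator, no destructor); struct obj_cache and its functions are invented names and are unrelated to the kernel's slab interface.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define CACHE_CAPACITY 16  /* arbitrary size of the stash of free objects */

struct obj_cache {
    size_t objsize;                   /* size of each object */
    void (*ctor)(void *obj);          /* constructor, run once per object */
    void *free_objs[CACHE_CAPACITY];  /* released, still-constructed objects */
    int nr_free;
    int ctor_calls;                   /* counts constructions, for the demo */
};

void obj_cache_init(struct obj_cache *c, size_t objsize,
                    void (*ctor)(void *obj))
{
    assert(c != NULL && objsize > 0);
    c->objsize = objsize;
    c->ctor = ctor;
    c->nr_free = 0;
    c->ctor_calls = 0;
}

void *obj_cache_alloc(struct obj_cache *c)
{
    void *obj;

    if (c->nr_free > 0)
        return c->free_objs[--c->nr_free];  /* reuse without constructing */

    obj = malloc(c->objsize);
    if (obj != NULL && c->ctor != NULL) {
        c->ctor(obj);
        c->ctor_calls++;
    }
    return obj;
}

/* The caller must hand the object back in its constructed state. */
void obj_cache_free(struct obj_cache *c, void *obj)
{
    if (c->nr_free < CACHE_CAPACITY)
        c->free_objs[c->nr_free++] = obj;   /* save it for later reuse */
    else
        free(obj);
}

/* Example constructor: the object is a single int initialized to zero. */
void zero_int_ctor(void *obj)
{
    *(int *)obj = 0;
}
```

Allocating, releasing, and allocating again returns the same object, and the constructor runs only once. The price is the convention noted in the comment: an object must be returned in its initialized state, otherwise the next user receives stale contents.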

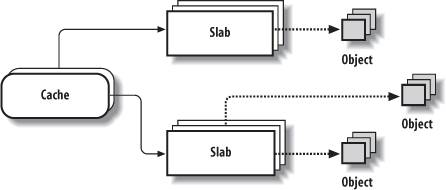

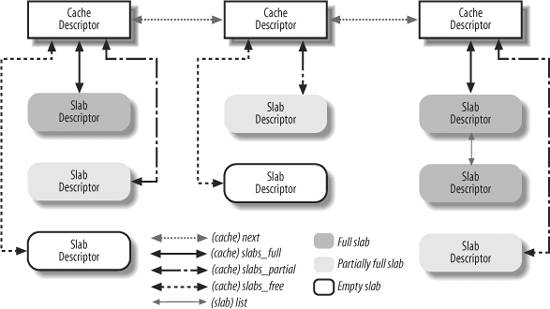

The slab allocator groups objects into caches. Each cache is a "store" of objects of the same type. For instance, when a file is opened, the memory area needed to store the corresponding "open file" object is taken from a slab allocator cache named filp (for "file pointer").

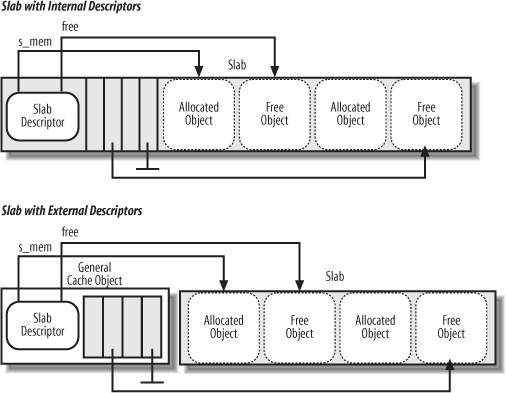

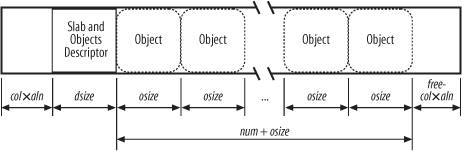

The area of main memory that contains a cache is divided into slabs; each slab consists of one or more contiguous page frames that contain both allocated and free objects (see Figure 8-3).

As we'll see in Chapter 17, the kernel periodically scans the caches and releases the page frames corresponding to empty slabs.

8.2.2. Cache Descriptor

Each cache is described by a structure of type kmem_cache_t (which is equivalent to the type struct kmem_cache_s), whose fields are listed in Table 8-8. We omitted from the table several fields used for collecting statistical information and for debugging.

Table 8-8. The fields of the kmem_cache_t descriptor

| Type | Name | Description |
|---|---|---|
| struct array_cache * [] | array | Per-CPU array of pointers to local caches of free objects (see the section "Local Caches of Free Slab Objects" later in this chapter). |
| unsigned int | batchcount | Number of objects to be transferred in bulk to or from the local caches. |
| unsigned int | limit | Maximum number of free objects in the local caches. This is tunable. |
| struct kmem_list3 | lists | See next table. |
| unsigned int | objsize | Size of the objects included in the cache. |
| unsigned int | flags | Set of flags that describes permanent properties of the cache. |
| unsigned int | num | Number of objects packed into a single slab. (All slabs of the cache have the same size.) |
| unsigned int | free_limit | Upper limit of free objects in the whole slab cache. |
| spinlock_t | spinlock | Cache spin lock. |
| unsigned int | gfporder | Logarithm of the number of contiguous page frames included in a single slab. |
| unsigned int | gfpflags | Set of flags passed to the buddy system function when allocating page frames. |
| size_t | colour | Number of colors for the slabs (see the section "Slab Coloring" later in this chapter). |
| unsigned int | colour_off | Basic alignment offset in the slabs. |
| unsigned int | colour_next | Color to use for the next allocated slab. |
| kmem_cache_t * | slabp_cache | Pointer to the general slab cache containing the slab descriptors (NULL if internal slab descriptors are used; see next section). |
| unsigned int | slab_size | The size of a single slab. |
| unsigned int | dflags | Set of flags that describe dynamic properties of the cache. |
| void * | ctor | Pointer to constructor method associated with the cache. |
| void * | dtor | Pointer to destructor method associated with the cache. |
| const char * | name | Character array storing the name of the cache. |
| struct list_head | next | Pointers for the doubly linked list of cache descriptors. |
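To see how the gfporder, objsize, and num fields relate, the following sketch computes how many bytes the page frames of one slab span and an upper bound on the number of objects that fit. It is a deliberate simplification: it assumes 4 KB page frames and ignores the slab descriptor, alignment, and coloring, all of which can lower the real value of num. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SIZE_BYTES 4096u  /* assumes the standard 4 KB page frame */

/* Bytes spanned by the 2^gfporder contiguous page frames of one slab. */
size_t slab_bytes(unsigned int gfporder)
{
    assert(gfporder < 16);
    return (size_t)PAGE_SIZE_BYTES << gfporder;
}

/* Upper bound on the objects per slab; the real num may be smaller. */
size_t max_objs_per_slab(unsigned int gfporder, size_t objsize)
{
    assert(objsize > 0);
    return slab_bytes(gfporder) / objsize;
}
```

For example, with gfporder equal to 0 and 128-byte objects, at most 32 objects fit in a slab.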

The lists field of the kmem_cache_t descriptor, in turn, is a structure whose fields are listed in Table 8-9.

Table 8-9. The fields of the kmem_list3 structure

| Type | Name | Description |
|---|---|---|