Configuration

Before describing the various configuration interfaces, I should point out that it is highly recommended to run defconfig before doing anything else. I describe exactly why later in this section, but, for now, suffice it to say that doing so will give you a UML configuration that is much more likely to boot and run.

There are a variety of kernel configuration interfaces, ranging from the almost completely hands-off oldconfig to the graphical and fairly user-friendly xconfig. Here are the major choices.

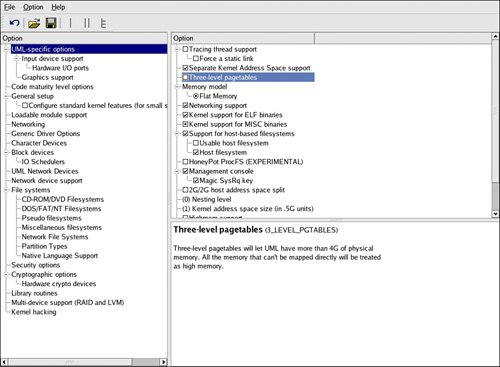

Clicking repeatedly on an option causes it to cycle through its possible settings. Normally, these choices are Enable versus Disable or Enable versus Modular versus Disable. Enable means that the option is built into the final kernel binary, Disable means that it's simply turned off, and Modular means that the option is compiled into a kernel module that can be inserted into the kernel at some later point. Some options have numeric or string values. Double-clicking on these opens a little pane in which you can type a new value. The main menu for the UML configuration is shown in Figure 11.1.

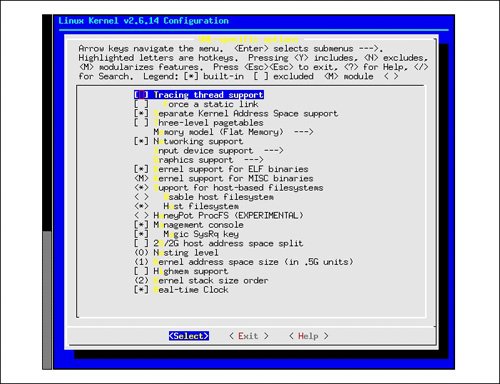

menuconfig presents the same menu organization as text in your terminal window. Navigation is done by using the up and down arrow keys and by typing the highlighted letters as shortcuts. The Tab key cycles around the Select, Exit, and Help buttons at the bottom of the window. Choosing Select enters a submenuExit leaves it and returns to the parent menu. Help displays the help for the current option. Hitting the spacebar will cycle through the settings for the current option. Empty brackets next to an option mean that it is disabled. An asterisk in the brackets means that it is enabled, and an "M" means that it is a module. When you are done choosing options, you select the Exit button repeatedly until you exit the top-level menu and are asked whether to keep this configuration or discard it. Figure 11.2 shows menuconfig running in an xterm window displaying the main UML-specific configuration menu.

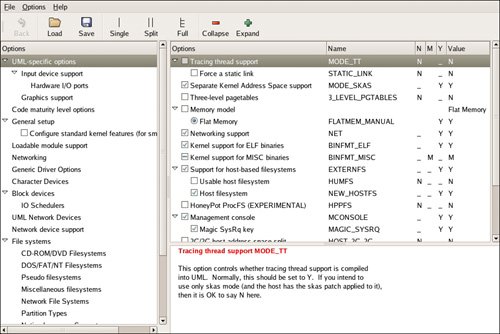

config is the simplest of the interactive configuration options. It asks you about every configuration option, one at a time. On a native x86 kernel, this is good for a soul-deadening afternoon. For UML, it's not nearly as bad, but this is still not the configuration method of choice. Some lesser-known configuration choices are useful in some situations. gconfig is a graphical configurator that's similar to xconfig. It's based on the GTK toolkit (which underlies the GNOME desktop environment) rather than the QT toolkit (which underlies the KDE desktop environment) as xconfig is. gconfig's behavior is nearly the same as xconfig's with the exception that checkboxes invite you to click on them, but they do nothing when you do. Instead, there are N, M, and Y columns on the right, as shown in Figure 11.3, which you can click in order to set options.

oldconfig is one of the mostly noninteractive configurators. It gives you a default configuration, with values taken from .config in the kernel tree if it's there, from the host's own configuration, or from the architecture's default configuration when all else fails. It does ask about options for which it does not have defaults. randconfig provides a random configuration. This is used to test the kernel build rather than to produce a useful kernel. defconfig provides a default configuration, using the defaults provided by the architecture. allmodconfig provides a configuration in which everything that can be configured as a module is. This is used either to get the smallest possible kernel binary for a given configuration or to test the kernel build procedure. allnoconfig provides a configuration with everything possible disabled. This is also used for kernel build testing rather than for producing useful kernels.

One important thing to remember throughout this process is that any time you run make in a UML pool, it is essential to put ARCH=um on the command line. This is because UML is a different architecture from the host, just like PowerPC (ppc) is a different architecture from PC (i386). UML's architecture name in the kernel pool is um. I dropped the l from uml because I considered it redundant in a Linux kernel pool.

Because the kernel build procedure will build a kernel for the machine on which it's running unless it's told otherwise, we need to tell it otherwise. This is the purpose of the ARCH=um switchit tells the kernel build procedure to build the um architecture, which is UML, rather than the host architecture, which is likely i386.

If you forget to add the ARCH=um switch at any point, as all of us do once in a while, the tree is likely to be polluted with host architecture data. I clean it up like this:

host% make mrproper

host% make mrproper ARCH=um

This does a full clean of both UML and the host architectures so that everything that was changed is cleaned up. Then, restart by redoing the configuration.

So, with that in mind, you can configure the UML kernel with something as simple as this:

host% make defconfig ARCH=um

This will give you the default configuration, which is recommended if this is the first time you are building UML. In this case, feel free to skip over to where we build UML. Otherwise, I'm going to talk about a number of UML-specific configuration options that are useful to know.

I recommend starting with defconfig before fine-tuning the UML configuration with another configurator. This is because, when starting with a clean tree, the other configurators look for a default configuration to start with. Unfortunately, they look in /boot and find the configuration for the host kernel, which is entirely unsuitable for UML. Using a configuration that started like this is likely to give you a UML that is missing many essential drivers and won't boot. Running defconfig as the first step, before anything else, will start you off with the default UML configuration. This configuration will boot, and the configuration you end up with after customizing it will likely boot as well. If it doesn't, you know what configuration changes you made and which are likely to have caused the problem.

Useful Configuration Options

Execution Mode-Specific Options

A number of configuration options are related to UML's execution mode. These are largely associated with tt mode, which may not exist by the time you read this. But it may still be present, and you may have to deal with a version of UML that has it.

MODE_TT and MODE_SKAS are the main options controlling UML's execution mode. They decide whether support for tt and skas modes, respectively, are compiled into the UML kernel. skas0 mode is part of MODE_SKAS. With MODE_TT disabled, tt mode is not available. Similarly, with MODE_SKAS disabled, skas3 and skas0 modes are not available. If you know you don't need one or the other, disabling an option will result in a somewhat smaller UML kernel. Having both enabled will produce a UML binary that tests the host's capabilities at runtime and chooses its execution mode accordingly. Given a UML with skas0 support, MODE_TT really isn't needed since skas0 will run on a standard, unmodified host. This used to be the rationale for tt mode, but skas0 mode makes it obsolete. At this point, the only reason for tt mode is to see if a UML problem is skas specific. In that case, you'd force UML to run in tt mode and see if the problem persists. Aside from this, MODE_TT can be safely disabled. STATIC_LINK forces the build to produce a statically linked binary. This is an option only when MODE_TT is disabled because a UML kernel with tt mode compiled in must be statically linked. With tt mode absent, the UML kernel is linked dynamically by default. However, as we saw in the last chapter, a statically linked binary is sometimes useful, as it simplifies setting up a chroot jail for UML. NEST_LEVEL makes it possible to run one UML inside another. This requires a configuration option because UML maps code and data into its own process address spaces in tt and skas0 modes. In tt mode, the entire UML kernel is present. In skas0 mode, there are just the two stub pages. When the UML process is another instance of UML, they will both want to load that data at the same location in the address space unless something is done to change that. NEST_LEVEL changes that. The default value is 0. By changing it to 1, you will build a UML that can run inside another UML instance. It will map its data lower in its process address spaces than the outer UML instance, so they won't conflict with each other. This is a total nonissue with skas3 mode since the UML kernel and its processes are in different address spaces. You can run a skas3 UML inside another UML without needing to specially configure either one. HOST_2G_2G is necessary for running a tt or skas0 UML on hosts that have the 2GB/2GB address space split. With this option enabled on the host, the kernel occupies the upper 2GB of the address space rather than the usual 1GB. This is uncommon but is sometimes done when the host kernel needs more address space for itself than the 1GB it gets by default. This allows the kernel to directly access more physical memory without resorting to Highmem. The downside of this is that processes get only the lower 2GB of address space, rather than the 3GB they get normally. Since UML puts some data into the top of its process address spaces in both tt and skas0 modes, it will try to access part of the kernel's address space, which is not allowed. The HOST_2G_2G option makes this data load into the top of a 2GB address space. CMDLINE_ON_HOST is an option that makes UML management on the host slightly easier. In tt mode, UML will make the process names on the host reflect the UML processes running in them, making it easy to see what's happening inside a UML from the host. This is accomplished through a somewhat nasty trick that ensures there is space on the initial UML stack to write this information so that it will be seen on the host. However, this trick, which involves UML changing its arguments and exec-ing itself, confuses some versions of gdb and makes it impossible to debug UML. Since this behavior is specific to tt mode, it is not needed when running in skas mode, even if tt mode support is present in the binary. This option controls whether the exec takes place. Disabling it will disable the nice process names on the host, but those are present only in tt mode anyway. PT_PROXY is a tt modespecific debugging option. Because of the way that UML uses ptrace in tt mode, it is difficult to use gdb to debug it. The tracing thread uses ptrace on all of the other threads, including when they are running in the kernel. gdb uses ptrace in order to control the process it has debugged, and two processes can't simultaneously use ptrace on a single process. In spite of this, it is possible to run gdb on a UML thread, in a clever but fairly nasty way. The UML tracing thread uses ptrace on gdb, intercepting its system calls. It emulates some of them in order to fake gdb into believing that it has successfully attached to a UML process and is controlling it. In reality, gdb isn't attached to or controlling anything. The UML tracing thread is actually controlling the UML thread, intercepting gdb operations and performing them itself. This behavior is enabled with the PT_PROXY operation. It gets its name from the ptrace operation proxying that the UML tracing thread does in order to enable running gdb on a tt mode UML. At runtime, this is invoked with the debug switch. This causes the tracing thread to start an xterm window with the captive gdb running inside it. Debugging a skas mode UML with gdb is much simpler. You can simply start UML under the control of gdb and debug it just as you would any other process. The KERNEL_HALF_GIGS option controls the amount of address space that a tt mode UML takes for its own use. This is similar to the host 2GB/2GB option mentioned earlier, and the motivation is the same. A larger kernel virtual address space allows it to directly access more physical memory without resorting to Highmem. The value for this option is an integer, which specifies how many half-gigabyte units of address space that UML will take for itself. The default value is 1increasing to 2 would cause UML to take the upper 1GB, rather than .5GB, for itself. In skas mode, with tt mode disabled, this is irrelevant. Since the kernel is in its own address space, it has a full process address space for its own use, and there's no reason to want to carve out a large chunk of its process address spaces.

Generic UML Options

A number of other configuration options don't depend on UML's execution mode. Some of these are shared with other Linux architectures but differ in interesting ways, while others are unique to UML.

The SMP and NR_CPUS options have the same meaning as with any other architectureSMP controls whether the UML kernel will be able to support multiple processors, and NR_CPUS controls the maximum number of processors the kernel can use. However, SMP on UML is different enough from SMP on physical hardware to warrant a discussion. Having an SMP virtual machine is completely independent from the host being SMP. An SMP UML instance has multiple virtual processors, which do not map directly to physical processors on the host. Instead, they map directly to processes on the host. If the UML instance has more virtual processors than the host has physical processors, the virtual processors will just be multiplexed on the physical ones by the host scheduler. Even if the host has the same or a greater number of processors than the UML instance, it is likely that the virtual processors will get timesliced on physical processors anyway, due to other demands on the host. An SMP UML instance can even be run on a uniprocessor host. This will lose the concurrency that's possible on an SMP host, but it does have its uses. Since having multiple virtual processors inside the UML instance translates into an equal number of potentially running processes on the host, a greater number of virtual processors provides a greater call on the host's CPU consumption. A four-CPU UML instance will be able to consume twice as much host CPU time as a two-CPU instance because it has twice as many processes on the host possibly running. Running an SMP instance on a host with a different number of processors is also useful for kernel debugging. The multiplexing of virtual processors onto physical ones can open up timing holes that wouldn't appear on a physical system. This can expose bugs that would be very hard or impossible to find on physical hardware. NR_CPUS limits the maximum number of processors that an SMP kernel will support. It does so by controlling the size of some internal data structures that have NR_CPUS elements. Making NR_CPUS unnecessarily large will waste some memory and maybe some CPU time by making the CPU caches less effective but is otherwise harmless. The HIGHMEM option also means the same thing as it does on the host. If you need more physical memory than can be directly mapped into the kernel's address space, what's left over must be Highmem. It can't be used for as many purposes as the directly mapped memory, and it must be mapped into the kernel's address space when needed and unmapped when it's not. Highmem is perfect for process memory on the host since that doesn't need to be mapped into the kernel's address space. This is true for tt mode UML instances, as well, since they follow the host's model of having the kernel occupy its process address spaces. However, for skas UML instances, which are in a different address space entirely, kernel access to process memory that has been mapped from the Highmem area is slow. It has to temporarily map the page of memory into its address space before it has access to it. This is one of the few examples of an operation that is faster in tt mode than in skas mode. The mapping operation is also slower for UML than for the host, making the performance cost of Highmem even greater. However, the need for Highmem is less because of the greater amount of physical memory that can be directly mapped into the skas kernel address space. The KERNEL_STACK_ORDER option is UML-specific and is somewhat specialized. It was introduced in order to facilitate running valgrind on UML. valgrind creates larger than normal signal frames, and since UML receives interrupts as signals, signal frames plus the normal call stack have to fit on a kernel stack. With valgrind, they often didn't, due to the increased signal frame size. This was later found to be useful in a few other cases. Some people doing kernel development in UML discovered that their code was overflowing kernel stacks. Increasing the KERNEL_STACK_ORDER parameter is useful in demonstrating that their system crashes are due to stack overflows and not something else, and to allow them to continue working without immediately needing to reduce their stack usage. By default, 3_LEVEL_PGTABLES is disabled on 32-bit architectures and enabled on 64-bit architectures. It is not available to be disabled in the 64-bit case, but it can be enabled for a 32-bit architecture. Doing this provides UML with the capability to access more than 4GB of memory, which is the two-level pagetable limit. This provides a way to experiment with very large physical memory UML instances on 32-bit hosts. However, the portion of this memory that can't be directly mapped will be Highmem, with the performance penalties that I have already mentioned. The UML_REAL_TIME_CLOCK option controls whether time intervals within UML are made to match real time as much as possible. This matters because the natural way for time to progress within a virtual machine is virtuallythat is, time progresses within the virtual machine only when it is actually running on the host. So, if you start a sleep for two seconds inside the UML instance and the host does other things for a few seconds before scheduling the instance, then five seconds or so will pass before the sleep ends. This is correct behavior in a sensethings running within the UML instance will perceive that time flows uniformly, that is, they will see that they can consistently do about the same amount of work in a unit of time. Without this, in the earlier example, a process would perceive the sleep ending immediately because it did no work between the start of the sleep and its end since the host had scheduled something else to run. In another sense, this is incorrect behavior. UML instances often have people interacting with them, and those people exist in real time. When someone asks for a five-second pause, it really should end in five real seconds, not five virtual ones. This behavior has actually broken tests. Some Perl regression tests run timers and fail if they take too long to expire. They measure the time difference by using gettimeofday, which is tied to the host's gettimeofday. When gettimeofday is real time and interval timers are virtual, there is bound to be a mismatch. So, the UML_REAL_TIME_CLOCK option was added to fix this problem. It is enabled by default since that is the behavior that almost everyone wants. However, in some cases it's not desired, so it is a configuration option, rather than hard coded. Intervals are measured by clock ticks, which on UML are timer interrupts from the host. The real-time behavior is implemented by looking at how many ticks should have happened between the last tick and the current one. Then the generic kernel's timer routine is called that many times. This makes the UML clock catch up with the real one, but it does so in spurts. Time stops for a while, and then it goes forward very quickly to catch up. When you are debugging UML, you may have it stopped at a gdb prompt for a long time. In this case, you don't want the UML instance to spend time in a loop calling the timer routine. For short periods of time, this isn't noticeable. However, if you leave the debugger for a number of hours before continuing it, there will be a noticeable pause while the virtual clock catches up with the real one. Another case is when you have a UML instance running on a laptop that is suspended overnight. When you wake it up, the UML instance will spend a good amount of time catching up with the many hours of real time it missed. In this case, the UML instance will appear to be hung until it catches up. If either of these situations happens enough to be annoying, and real-time timers aren't critical, you can disable this option.

Virtual Hardware Options

UML has a number of device drivers, each with its own configuration option. I'm going to mention a few of the less obvious ones here.

The MCONSOLE option enables the MConsole driver, which is required in order to control and configure the instance through an MConsole client. This is on by default and should remain enabled unless you have a good reason to not want it. The MAGIC_SYSRQ option is actually a generic kernel option but is related to MCONSOLE tHRough the MConsole sysrq command. Without MAGIC_SYSRQ enabled, the sysrq command won't work. The UML_RANDOM option enables a "hardware" random number generator for UML. Randomness is often a problem for a server that needs random numbers to seed ssh or https sessions. Desktop machines can rely on the user for randomness, such as the time between keystrokes or mouse movements. Physical servers rely on randomness, such as the time between I/O interrupts, from their drivers, which is sometimes insufficient. Virtual machines have an even harder time since they have fewer sources of randomness than physical machines. It is not uncommon for ssh or https key generation to hang for a while until the UML instance acquires enough randomness. The UML random number generator has access to all of the host's randomness from the host's /dev/random, rather than having to generate it all itself. If the host has problems providing enough random numbers, key generation and other randomness-consuming operations will still hang. But they won't hang for as long as they would without this driver. In order to use this effectively, you need to run the hwrng tools within the UML instance. This package reads randomness from /dev/hwrng, which is attached to this driver, and feeds it into /dev/random, from where the randomness is finally consumed. The MMAPPER option implements a virtual iomem driver. This allows a host file to be used as an I/O area that is mapped into the UML instance's physical memory. This specialized option is mostly used for writing emulated drivers and cluster interconnects. The WATCHDOG and UML_WATCHDOG options implement a "hardware" watchdog for UML. The "hardware" portion of it is a process running outside of UML. This process is started when the watchdog device is opened within the UML instance and communicates with the driver through a pipe. It expects to receive some data through that pipe at least every 60 seconds. This happens when the process inside the UML instance that opened the device writes to it. If the external watchdog process doesn't receive input within 60 seconds, it presumes that the UML instance is hung and takes measures to deal with it. If it was told on its command line that there is an MConsole notify socket, it will send a "hang" notification there. (We saw this in Chapter 8.) Otherwise, it will kill the UML instance itself by sending the main process a sufficiently severe signal to shut it down.

Networking

A number of networking options control how the UML instance can exchange packets with the host and with other UML instances. UML_NET enables UML networkingit must be enabled for any network drivers to be available at all. The rest each control a particular packet transport, and their names should be self-explanatory:

UML_NET_ETHERTAP UML_NET_TUNTAP UML_NET_SLIP UML_NET_DAEMON UML_NET_MCAST UML_NET_PCAP UML_NET_SLIRP

UML_NET and all of the transport options are enabled by default. Disabling ones that will not be needed will save a small amount of code.

Consoles

A similar set of console and serial line options control how they can be connected to devices on the host. Their names should also be self explanatory:

NULL_CHAN PORT_CHAN PTY_CHAN TTY_CHAN XTERM_CHAN

The file descriptor channel, which, by default, the main console uses to attach itself to stdin and stdout, is not configurable. It is always on because people were constantly disabling it and sending mail to the UML mailing lists wondering why UML wouldn't boot.

There is an option, SSL, to enable UML serial line support. Serial lines aren't much different from consoles, so having them doesn't do much more than add some variety to the device names through which you can attach to a UML instance.

Finally, the default settings for console zero, the rest of the consoles, and the serial lines are all configurable. These values are strings, and describe what host device the UML devices should be attached to. These, and their default values, are as follows:

CON_ZERO_CHAN0,fd:1 CON_CHANxterm SSL_CHANpty

Debugging

I talked about a number of debugging options in the context of tt mode already since they are specific to tt mode. A few others allow UML to be profiled by the gprof and gcov tools. These work only in skas mode since a tt mode UML instance breaks assumptions made by them.

The GPROF option enables gprof support in the UML build, and the GCOV option similarly enables gcov support. These change the compilation flags so as to tell the compiler to generate the code needed for the profiling. In the case of gprof, the generated code tracks procedure calls and keeps statistical information about where the UML instance is spending its time. The code generated for gcov tracks what blocks of code have been executed and how many times they were executed.

A UML profiling run is just like any other process. You start it, exercise it for a while, stop it, and generate the statistics you want. In the case of UML, the profiling starts when UML boots and ends when it shuts down. Running gprof or gcov after that is exactly like running it on any other application.

Compilation

Now that the UML has been configured, it is time to build it. On 2.6 hosts, we need to take care of one more detail. If the UML instance is to be built to use AIO support on the host, a header file, include/ linux/aio_abi.h in the UML tree, must be copied to /usr/ include/linux/aio_abi.h on the host.

With this taken care of, building UML is as simple as this:

If you have built Linux kernels before, you will see that the UML build is very similar to what you have seen before. When it finishes, you will get two identical files, called vmlinux and linux. In fact, they are hard links to the same file. Traditionally, the UML build produced a file called linux rather than the vmlinux or vmlinuz that the kernel build normally produces. I did this on purpose, believing that having the binary be named linux was more intuitive than vmlinux or vmlinuz.

This was true, and most people like the name, but some kernel hackers are very used to an output file named vmlinux. Also, the kernel build became stricter over time, and it became very hard to avoid having a final binary named vmlinux. So, I made the UML build produce the vmlinux file, and as a final step, link the name linux to that file. This way, everyone is happy.

Chapter 12. Specialized UML Configurations

So far we have seen UML instances with fairly normal virtual hardware configurationsthey are similar to common physical machines. Now we will look at using UML to emulate unusual configurations that can't even be approached with common hardware. This includes configurations with lots of devices, such as block devices and network interfaces, many CPUs, and very large physical memory, and more specialized configurations, such as clusters.

By virtualizing hardware, UML makes it easy to simulate these configurations. Virtual devices can be constructed as long as host and UML memory hold out and no built-in software limits are reached. There are no constraints such as those based on the number of slots on a bus or the number of buses on a system.

UML can also emulate hardware you might not even have one instance of. We'll see an example of this when we build a cluster, which will need a shared storage device. Physically, this is a disk that is some-how multiported, either because it is multiported itself or because it's on a shared bus. Either way, this is an expensive, noncommodity device. However, with UML, a shared device is simply a file on the host to which multiple UML instances have access.

Large Numbers of Devices

We'll start by configuring a UML instance with a large number of devices. The reasons for wanting to do this vary. For many people, there is value in looking at /proc/meminfo and seeing an absurdly large amount of memory, or running df and seeing more disk space than you could fit in a room full of disks.

More seriously, it allows you to explore the scalability limits of the Linux kernel and the applications running on it. This is useful when you are maintaining some software that may run into these limits, and your users may have hardware that may do so, but you don't. You can emulate the large configuration to see how your software reacts to it.

You may also be considering acquiring a very large machine but want to know whether it is fully usable by Linux and the applications you envision running on it. UML will let you explore the software limitations. Obviously, any hardware limitations, such as the number of bus slots and controllers and the like, can't be explored in this way.

Network Interfaces

Let's start by configuring a pair of UML instances with a large number of network interfaces. We will boot the two instances, debian1 and debian2, and hot-plug the interfaces into them. So, with the UML instances booted, you do this as follows:

host% for i in `seq 0 127`; do uml_mconsole debian1 \

config eth$i=mcast,,224.0.0.$i; done

host% for i in `seq 0 127`; do uml_mconsole debian2 \

config eth$i=mcast,,224.0.0.$i; done

These two lines of shell configure 128 network interfaces in each UML instance. You'll see a string of OK messages from each of these, plus a lot of console output in the UML instances if kernel output is logged there. Running dmesg in one of the instances will show you something like this:

Netdevice 124 : mcast backend multicast address: \

224.0.0.124:1102, TTL:1

Configured mcast device: 224.0.0.125:1102-1

Netdevice 125 : mcast backend multicast address: \

224.0.0.125:1102, TTL:1

Configured mcast device: 224.0.0.126:1102-1

Netdevice 126 : mcast backend multicast address: \

224.0.0.126:1102, TTL:1

Configured mcast device: 224.0.0.127:1102-1

Netdevice 127 : mcast backend multicast address: \

224.0.0.127:1102, TTL:1

Running ifconfig inside the UML instances will confirm that interfaces eth0 through etH127 now exist. If you're brave, run ifconfig -a. Otherwise, just do some spot-checking:

UML# ifconfig eth120

eth120 Link encap:Ethernet HWaddr 00:00:00:00:00:00

BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 \

frame:0

TX packets:0 errors:0 dropped:0 overruns:0 \

carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

Interrupt:5

This indicates that we indeed have the network interfaces we asked for. I configured them to attach to multicast networks on the host, so they will be used purely to network between the two instances. They can't talk directly to the outside network unless you configure one of the instances with an interface attached to a TUN/TAP device and use it as a gate way. Each of an instance's interfaces is attached to a different host multicast address, which means they are on different networks. So, taken in pairs, the corresponding interfaces on the two instances are on the same network and can communicate with each other.

For example, the two eth0 interfaces are both attached to the host multicast IP address 224.0.0.0 and thus will see each other's packets. The two etH1 interfaces are on 224.0.0.1 and can see each other's packets, but they won't see any packets from the eth0 interfaces.

Next, we configure the interfaces inside the UML instances. I'm going to put each one on a different network in order to correspond to the connectivity imposed by the multicast configuration on the host. The eth0 interfaces will be on the 10.0.0.0/24 network, the etH1 interfaces will be on the 10.0.1.0/24 network, and so forth:

UML1# for i in `seq 0 127`; do ifconfig eth$i 10.0.$i.1/24 up; done

UML2# for i in `seq 0 127`; do ifconfig eth$i 10.0.$i.2/24 up; done

Now the interfaces in the first UML instance are running and have the .1 addresses in their networks, and the interfaces in the second instance have the .2 addresses. Again, some spot-checking will confirm this:

UML1# ifconfig eth75

eth75 Link encap:Ethernet HWaddr FE:FD:0A:00:4B:01

inet addr:10.0.75.1 Bcast:10.255.255.255 \

Mask:255.0.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 \

Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 \

frame:0

TX packets:0 errors:0 dropped:0 overruns:0 \

carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

Interrupt:5

UML2# ifconfig eth100

eth100 Link encap:Ethernet HWaddr FE:FD:0A:00:64:02

inet addr:10.0.100.2 Bcast:10.255.255.255 \

Mask:255.0.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 \

Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 \

frame:0

TX packets:0 errors:0 dropped:0 overruns:0 \

carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

Interrupt:5

Let's see if the interfaces work:

UML1# ping 10.0.50.2

PING 10.0.50.2 (10.0.50.2): 56 data bytes

64 bytes from 10.0.50.2: icmp_seq=0 ttl=64 time=56.3 ms

64 bytes from 10.0.50.2: icmp_seq=1 ttl=64 time=15.7 ms

64 bytes from 10.0.50.2: icmp_seq=2 ttl=64 time=16.6 ms

64 bytes from 10.0.50.2: icmp_seq=3 ttl=64 time=14.9 ms

64 bytes from 10.0.50.2: icmp_seq=4 ttl=64 time=16.4 ms

--- 10.0.50.2 ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max = 14.9/23.9/56.3 ms

You can try some of the others by hand or check all of them with a bit of shell such as this:

UML1# for i in `seq 0 127`; do ping -c 1 10.0.$i.2 ; done

This exercise is fun and interesting, but what's the practical use? We have demonstrated that there appears to be no limit, aside from memory, on how many network interfaces Linux will support. To tell for sure, we would need to look at the kernel source. But if you are seriously asking this sort of question, you probably have some hardware limit in mind, and setting up some virtual machines is a quick way to tell whether the operating system or the networking tools have a lower limit.

By poking around a bit more, we can see that other parts of the system are being exercised. Taking a look at the routing table will show you one route for every device we configured. An excerpt looks like this:

UML1# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric \

Ref Use Iface

10.0.20.0 0.0.0.0 255.255.255.0 U 0 \

0 0 eth20

10.0.21.0 0.0.0.0 255.255.255.0 U 0 \

0 0 eth21

10.0.22.0 0.0.0.0 255.255.255.0 U 0 \

0 0 eth22

10.0.23.0 0.0.0.0 255.255.255.0 U 0 \

0 0 eth23

This would be interesting if you wanted a large number of networks, rather than simply a large number of interfaces.

Similarly, we are exercising the arp cache more than usual. Here is an excerpt:

UML# arp -an

? (10.0.126.2) at FE:FD:0A:00:7E:02 [ether] on eth126

? (10.0.64.2) at FE:FD:0A:00:40:02 [ether] on eth64

? (10.0.110.2) at FE:FD:0A:00:6E:02 [ether] on eth110

? (10.0.46.2) at FE:FD:0A:00:2E:02 [ether] on eth46

? (10.0.111.2) at FE:FD:0A:00:6F:02 [ether] on eth111

This all demonstrates that, if there are any hard limits in the Linux networking subsystem, they are reasonably high. A related but different question is whether there are any problems with performance scaling to this many interfaces and networks. If you are concerned about this, you probably have a particular application or workload in mind and would do well to run it inside a UML instance, varying the number of interfaces, networks, routes, or whatever its performance depends on.

For demonstration purposes, since I lack such a workload, I will use standard system tools to see how well performance scales as the number of interfaces increases.

Let's look at ping times as the number of interfaces increases. I'll shut down all of the Ethernet devices and bring up an increasing number on each test. The first two rounds look like this:

UML# export n=0 ; for i in `seq 0 $n`; \

do ifconfig eth$i 10.0.$i.1/24 up; done ; \

for i in `seq 0 $n`; do ping -c 2 10.0.$i.2 ; done ; \

for i in `seq 0 $n`; do ifconfig eth$i down ; done

PING 10.0.0.2 (10.0.0.2): 56 data bytes

64 bytes from 10.0.0.2: icmp_seq=0 ttl=64 time=36.0 ms

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=4.9 ms

--- 10.0.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 4.9/20.4/36.0 ms

UML# export n=1 ; for i in `seq 0 $n`; \

do ifconfig eth$i 10.0.$i.1/24 up; done ; \

for i in `seq 0 $n`; do ping -c 2 10.0.$i.2 ; \

done ; for i in `seq 0 $n`; do ifconfig eth$i down ; done

PING 10.0.0.2 (10.0.0.2): 56 data bytes

64 bytes from 10.0.0.2: icmp_seq=0 ttl=64 time=34.0 ms

64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=4.9 ms

--- 10.0.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 4.9/19.4/34.0 ms

PING 10.0.1.2 (10.0.1.2): 56 data bytes

64 bytes from 10.0.1.2: icmp_seq=0 ttl=64 time=35.4 ms

64 bytes from 10.0.1.2: icmp_seq=1 ttl=64 time=5.0 ms

--- 10.0.1.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 5.0/20.2/35.4 ms

The two-interface ping times are essentially the same as the one-interface times. We are looking at how the times change, rather than their actual values compared to ping times on the host. A virtual machine will necessarily have different performance characteristics than a physical one, but they should scale similarly.

We see the first ping taking much longer than the second because of the arp request and response that have to occur before any ping requests can be sent out. The sending system needs to determine the Ethernet MAC address corresponding to the IP address you are pinging. This requires an arp request to be broadcast and a reply to come back from the target host before the actual ping request can be sent. The second ping time measures the actual time of a ping round trip.

I won't bore you with the full output of repeating this, doubling the number of interfaces at each step. However, this is typical of the times I got with 128 interfaces:

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 6.7/22.7/38.8 ms

PING 10.0.123.2 (10.0.123.2): 56 data bytes

64 bytes from 10.0.123.2: icmp_seq=0 ttl=64 time=39.1 ms

64 bytes from 10.0.123.2: icmp_seq=1 ttl=64 time=8.9 ms

--- 10.0.123.2 ping statistics ---

With 128 interfaces, both ping times are around 4 ms greater than with one. This suggests that the slowdown is in the IP routing code since this is exercised once for each packet. The arp requests don't go through the IP stack, so they wouldn't be affected by any slowdowns in the routing code.

The 4-ms slowdown is comparable to the fastest ping time, which was around 5 ms, suggesting that the routing overhead with 128 networks and 128 routes is comparable to the ping round trip time.

In real life, you're unlikely to be interested in how fast pings go when you have a lot of interfaces, routes, arp table entries, and so on. You're more likely to have a workload that needs to operate in an environment with these sorts of scalability requirements. In this case, instead of running pings with varying numbers of interfaces, you'd run your workload, changing the number of interfaces as needed, and make sure it behaves acceptably within the range you plan for your hardware.

Memory

Memory is another physical asset that a system may have a lot of. Even though it's far cheaper than it used to be, outfitting a machine with many gigabytes is still fairly pricy. You may still want to emulate a large-memory environment before splashing out on the actual physical article. Doing so may help you decide whether your workload will benefit from having lots of memory, and if so, how much memory you need. You can determine your memory sweet spot so you spend enough on memory but not too much.

You may have guessed by now that we are going to look at large-memory UML instances, and you'd be right. To start with, here is /proc/meminfo from a 64GB UML instance:

UML# more /proc/meminfo

MemTotal: 65074432 kB

MemFree: 65048744 kB

Buffers: 824 kB

Cached: 9272 kB

SwapCached: 0 kB

Active: 5252 kB

Inactive: 6016 kB

HighTotal: 0 kB

HighFree: 0 kB

LowTotal: 65074432 kB

LowFree: 65048744 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 112 kB

Writeback: 0 kB

Mapped: 2772 kB

Slab: 4724 kB

CommitLimit: 32537216 kB

Committed_AS: 4064 kB

PageTables: 224 kB

VmallocTotal: 137370258416 kB

VmallocUsed: 0 kB

VmallocChunk: 137370258416 kB

This output is from an x86_64 UML on a 1GB host. Since x86_64 is a 64-bit architecture, there is plenty of address space for UML to map many gigabytes of physical memory. In contrast, x86, as a 32-bit architecture, doesn't have sufficient address space to cleanly handle large amounts of memory. On x86, UML must use the kernel's Highmem support in order to handle greater than about 3GB of physical memory. This works, but, as I discussed in Chapter 9, there's a large performance penalty to pay because of the requirement to map the high memory into low memory where the kernel can directly access it.

On an x86 UML instance, the meminfo output would have a large amount of Highmem in the HighTotal and HighFree fields. On 64-bit hosts, this is unnecessary, and all the memory appears as LowTotal and LowFree. The other unusual feature here is the even larger amount of vmalloc space, 137 terabytes. This is simply the address space that the UML instance doesn't have any other use for.

There has to be more merit to large-memory UML instances than impressive numbers in /proc/meminfo. That's enough for me, but other people seem to be more demanding. A more legitimate excuse for this sort of exercise is to see how the performance of a workload or application will change when given a large amount of memory.

In order to do this, we need to be able to judge the performance of a workload in a given amount of memory. On a physical machine, this would be a matter of running it and watching the clock on the nearest wall. Having larger amounts of memory improves performance by allowing more data to be stored in memory, rather than on disk. With insufficient memory, the system has to swap data to disk when it's unused and swap it back in when it is referenced again. Some intelligent applications, such as databases, do their own caching based on the amount of memory in the system. In this case, the trade-off is usually still against storing data in memory. For example, a database will read more index data from disk when it has enough memory, speeding lookups.

In the example above, the 64GB UML instance is running on a 1GB host. It's obviously not manufacturing 63GB of memory, so that extra memory is ultimately backed by disk. You can run applications that consume large amounts of memory, and the UML instance will not have to use its own swap. However, since this will exceed the amount of memory on the host, it will start swapping. This means you can't watch the clock in order to decide how your workload will perform with a lot of memory available.

Instead, you need to find a proxy for performance. A proxy is a measurement that can stand in for the thing you are really interested in when that thing can't be measured directly. I've been talking about disk I/O, either by the system swapping or by the application reading in data on its own. So, watching the UML instance's disk I/O is a good way to decide whether the workload's performance will improve. The greater the decrease in disk traffic, the greater the performance improvement you can expect.

As with increasing amounts of any resource, there will be a point of diminishing returns, where adding an increment of memory results in a smaller performance increase than the previous increment did. Graphing performance against memory will typically show a relatively narrow region where the performance levels off. It may still increase, but suddenly at a slower rate than before. This performance "knee" is usually what you aim at when you design a system. Sometimes the knee is too expensive or is unattainable, and you add as much memory as you can, accepting a performance point below the knee. In other cases, you need as much performance as you can get, and you accept the diminishing performance returns with much of the added memory.

As before, I'm going to use a little fake workload in order to demonstrate the techniques involved. I will create a database-like workload with a million small files. The file metadatathe file names, sizes, modification dates, and so onwill stand in for the database indexes, and their contents will stand in for the actual data. I need such a large number of files so that their metadata will occupy a respectable amount of memory. This will allow us to measure how changing the amount of system memory impacts performance when searching these files.

The following procedure creates the million files in three stages, increasing the number by a factor of 100 at each step:

First, copy 1024 characters from /etc/passwd into the file 0 and make 99 copies of it in the files 1 through 99. Next, create a subdirectory, move those files into it, and make 99 copies, creating 10,000 files. Repeat this, creating 99 more copies of the current directory, leaving us with a million files, containing 1024 characters apiece.

UML# mkdir test

UML# cd test

UML# dd if=/etc/passwd count=1024 bs=1 > 0

1024+0 records in

1024+0 records out

UML# for n in `seq 99` ; do cp 0 $n; done

UML# ls

1 14 19 23 28 32 37 41 46 50 55 6 \

64 69 73 78 82 87 91 96

10 15 2 24 29 33 38 42 47 51 56 60 \

65 7 74 79 83 88 92 97

11 16 20 25 3 34 39 43 48 52 57 61 \

66 70 75 8 84 89 93 98

12 17 21 26 30 35 4 44 49 53 58 62 \

67 71 76 80 85 9 94 99

13 18 22 27 31 36 40 45 5 54 59 63 \

68 72 77 81 86 90 95 0

UML# mkdir a

UML# mv * a

mv: cannot move `a' to a subdirectory of itself, `a/a'

UML# mv a 0

UML# for n in `seq 99` ; do cp -a 0 $n; done

UML# mkdir a

UML# mv * a

mv: cannot move `a' to a subdirectory of itself, `a/a'

UML# mv a 0

UML# for n in `seq 99` ; do cp -a 0 $n; done

Now let's reboot in order to get some clean memory consumption data. On reboot, log in, and look at /proc/diskstats in order to see how much data was read from disk during the boot:

UML# cat /proc/diskstats

98 0 ubda 375 221 18798 2860 55 111 1328 150 0 2740 3010

The sixth field (18798, in this case) is the number of sectors read from the disk so far. With 512-byte sectors, this means that the boot read around 9.6MB (9624576 bytes, to be exact).

Now, to see how much memory we need in order to search the metadata of the directory hierarchy, let's run a find over it:

UML# cd test

UML# find. > /dev/null

Let's look at diskstats again, using awk to pick out the correct field so as to avoid taxing our brains by having to count up to six:

UML# awk '{ print $6 }' /proc/diskstats

214294

UML# echo $[ (214214 - 18798) * 512 ]

100052992

This pulled in about 100MB of disk space. Any amount of memory much more than that will be plenty to hold all of the metadata we will need. To check this, we can run the find again and see that there isn't much disk input:

UML# awk '{ print $6 }' /proc/diskstats

215574

UML# find. > /dev/null

UML# awk '{ print $6 }' /proc/diskstats

215670

So, there wasn't much disk I/O, as expected.

To see how much total memory would be required to run this little workload, let's look at /proc/meminfo:

UML# grep Mem /proc/meminfo

MemTotal: 1014032 kB

MemFree: 870404 kB

A total of 143MB of memory has been consumed so far. Anything over that should be able to hold the full set of metadata. We can check this by rebooting with 160MB of physical memory:

UML# cd test

UML# awk '{ print $6 }' /proc/diskstats

18886

UML# find . > /dev/null

UML# awk '{ print $6 }' /proc/diskstats

215390

UML# find . > /dev/null

UML# awk '{ print $6 }' /proc/diskstats

215478

UML# grep Mem /proc/meminfo

MemTotal: 156276 kB

MemFree: 15684 kB

This turns out to be correct. We had essentially no disk reads on the second search and pretty close to no free memory afterward.

We can check this by booting with a lot less memory and seeing if there is a lot more disk activity on the second find. With an 80MB UML instance, there was about 90MB of disk activity between the two searches. This indicates that 80MB was not enough memory for optimal performance in this case, and a lot of data that was cached during the first search had to be discarded and read in again during the second. On a physical machine, this would result in a significant performance loss. On a virtual machine, it wouldn't necessarily, depending on how well the host is caching data. Even if the UML instance is swapping, the performance loss may not be nearly as great as on a physical machine. If the host is caching the data that the UML instance is swapping, then swapping the data back in to the UML instance involves no disk activity, in contrast to the case with a physical machine. In this case, swapping would result in a performance loss for the UML instance, but a lot less than you would expect for a physical system.

We measured the difference between an 80MB UML instance and a 160MB one, which are very far from the 64MB instance with which I started. These memory sizes are easily reached with physical systems today (it would be hard to buy a system with less than many times as much memory as this), and this difference could easily have been tested on a physical system.

To get back into the range of memory sizes that aren't so easily reached with a physical machine, we need to start searching the data. My million files, plus the rest of the files that were already present, occupy about 6.5GB.

With a 1GB UML instance, there are about 5.5GB of disk I/O on the first search and about the same on the second, indicating that this is not nearly enough memory and that there is less actual data being read from the disk than df would have us believe:

UML# awk '{ print $6 }' /proc/diskstats

18934

UML# find . -xdev -type f | xargs cat > /dev/null

UML# awk '{ print $6 }' /proc/diskstats

11033694

UML# find . -xdev -type f | xargs cat > /dev/null

UML# awk '{ print $6 }' /proc/diskstats

22050006

UML# echo $[ (11033694 - 18934) * 512 ]

5639557120

UML# echo $[ (22050006 - 11033694) * 512 ]

5640351744

With a 4GB UML instance, we might expect the situation to improve, but with still a noticeable amount of disk activity on the second search.

UML# awk '{ print $6 }' /proc/diskstats

89944

UML# find / -xdev -type f | xargs cat > /dev/null

UML# awk '{print $6}' /proc/diskstats

13187496

UML# echo $[ 13187496 * 512 ]

6751997952

UML# awk '{print $6}' /proc/diskstats

26229664

UML# echo $[ (26229664 - 13187496) * 512 ]

6677590016

Actually, there is no improvementthere was just as much input during the second search as during the first. In retrospect, this shouldn't be surprising. While a lot of the data could have been cached, it wasn't because the kernel had no way to know that it was going to be used again. So, the data was thrown out in order to make room for data that was read in later.

In situations like this, the performance knee is very sharpyou may see no improvement with increasing memory until the workload's entire data set can be held in memory. At that point, there will likely be a very large performance improvement. So, rather than the continuous performance curve you might expect, you would get something more like a sudden jump at the magic amount of memory that holds all of the data the workload will need.

We can check this by booting a UML instance with more than about 6.5GB of memory. Here are the results with a 7GB instance:

UML# awk '{print $6}' /proc/diskstats

19928

UML# find / -xdev -type f | xargs cat > /dev/null

UML# awk '{print $6}' /proc/diskstats

13055768

UML# echo $[ (13055768 - 19928) * 512 ]

6674350080

UML# find / -xdev -type f | xargs cat > /dev/null

UML# awk '{print $6}' /proc/diskstats

14125882

UML# echo $[ (14125882 - 13055768) * 512 ]

547898368

We had about a half gigabyte of data read in from disk on the second run, which I don't really understand. However, this is far less than we had with the smaller memory instances. On a physical system, this would have translated into much better performance. The UML instance didn't run any faster with more memory because real time is going to depend on real resources. The real resource in this case is physical memory on the host, which was the same for all of these tests. In fact, the larger memory instances performed noticeably worse than the smaller ones. The smallest instance could just about be held in the host's memory, so its disk I/O was just reading data on behalf of the UML instance. The larger instances couldn't be held in the host's memory, so there was that I/O, plus the host had to swap a large amount of the instance itself in and out.

This emphasizes the fact that, in measuring performance as you adjust the virtual hardware, you should not look at the clock on the wall. You should find some quantity within the UML instance that will correlate with performance of a physical system with that hardware running the same workload. Normally, this is disk I/O because that's generally the source for all the data that's going to fill your memory. However, if the data is coming from the network, and increasing memory would be expected to reduce network use, then you would look at packet counts rather than disk I/O.

If you were doing this for real in order to determine how much memory your workload needs for good performance, you wouldn't have created a million small files and run find over them. Instead, you'd copy your actual workload into a UML instance and boot it with varying amounts of memory. A good way to get an approximate number for the memory it needs is to boot with a truly large amount of memory, run the workload, and see how much data was read from disk. A UML instance with that amount of memory, plus whatever it needs during boot, will very likely not need to swap out any data or read anything twice.

However, this approximation may overstate the amount of memory you need for decent performancea good amount of it may be holding data that is not important for performance. So, it would also be a good idea, after checking this first amount of memory to see that it gives you good performance, to decrease the memory size until you see an increase in disk reads. At this point, the UML instance can't hold all of the data that is needed for good performance.

This, plus a bit more, is the amount of memory you should aim at with your physical system. There may be reasons it can't be reached, such as it being too expensive or the system not being able to hold that much. In this case, you need to accept lower than optimal performance, or take some more radical steps such as reworking the application to require less memory or spreading it across several machines, as with a cluster. You can use UML to test this, as well.

Clusters

Clusters are another area where we are going to see increasing amounts of interest and activity. At some point, you may have a situation where you need to know whether your workload would benefit in some way from running on a cluster.

I am going to set up a small UML cluster, using Oracle's ocfs2 to demonstrate it. The key part of this, which is not common as hardware, is a shared storage device. For UML, this is simply a file on the host that multiple UML instances can share. In hardware, this would require a shared bus of some sort, which you quite likely don't have and which would be expensive to buy, especially for testing. Since UML requires only a file on the host, using it for cluster experiments is much more convenient and less expensive.

Getting Started

First, since ocfs2 is somewhat experimental (it is in Andrew Morton's-mm TRee, not in Linus' mainline tree at this writing), you will likely need to reconfigure and rebuild your UML kernel. Second, procedures for configuring a cluster may change, so I recommend getting Oracle's current documentation. The user guide is available from http://oss.oracle.com/projects/ocfs2/.

The ocfs2 configuration script requires that everything related toocfs2 be built as modules, rather than just being compiled into the kernel. This means enabling ocfs2 (in the Filesystems menu) andconfigfs (which is the "Userspace-driven configuration filesystem" item in the Pseudo Filesystems submenu). These options both need to be set to "M."

After building the kernel and modules, you need to copy the modules into the UML filesystem you will be using. The easiest way to do this is to loopback-mount the filesystem on the host (at ./rootfs, in this example) and install the modules into it directly:

host% mkdir rootfs

host# mount root_fs.cluster rootfs -o loop

host# make modules_install INSTALL_MOD_PATH=`pwd`/rootfs

INSTALL fs/configfs/configfs.ko

INSTALL fs/isofs/isofs.ko

INSTALL fs/ocfs2/cluster/ocfs2_nodemanager.ko

INSTALL fs/ocfs2/dlm/ocfs2_dlm.ko

INSTALL fs/ocfs2/dlm/ocfs2_dlmfs.ko

INSTALL fs/ocfs2/ocfs2.ko

host# umount rootfs

You can also install the modules into an empty directory, create a tar file of it, copy that into the running UML instance over the network, and untar it, which is what I normally do, as complicated as it sounds.

Once you have the modules installed, it is time to set things up within the UML instance. Boot it on the filesystem you just installed the modules into, and log into it. We need to install the ocfs2 utilities, which I got from http://oss.oracle.com/projects/ocfs2-tools/. There's a Down-loads link from which the source code is available. You may wish to see if your UML root filesystem already has the utilities installed, in which case you can skip down to setting up the cluster configuration file.

My system doesn't have the utilities, so, after setting up the network, I grabbed the 1.1.2 version of the tools:

UML# wget http://oss.oracle.com/projects/ocfs2-tools/dist/\

files/source/v1.1/ocfs2-tools-1.1.2.tar.gz

UML# gunzip ocfs2-tools-1.1.2.tar.gz

UML# tar xf ocfs2-tools-1.1.2.tar

UML# cd ocfs2-tools-1.1.2

UML# ./configure

I'll spare you the configure output; I had to install a few packages, such as e2fsprogs-devel (for libcom_err.so ), readline-devel, and glibc2-devel. I didn't install the python development package, which is needed for the graphical ocfs2console. I'll be demonstrating everything on the command line, so we won't need that.

After configuring ocfs2, we do the usual make and install :

UML# make && make install

install will put things under /usr/local unless you configured it differently.

At this point, we can do some basic checking by looking at the cluster status and loading the necessary modules. The guide I'm reading refers to the control script as /etc/init.d/o2cb, which I don't have. Instead, I have ./vendor/common/o2cb.init in the source directory, which seems to behave as the fictional /etc/init.d/o2cb.

UML# ./vendor/common/o2cb.init status

Module "configfs": Not loaded

Filesystem "configfs": Not mounted

Module "ocfs2_nodemanager": Not loaded

Module "ocfs2_dlm": Not loaded

Module "ocfs2_dlmfs": Not loaded

Filesystem "ocfs2_dlmfs": Not mounted

Nothing is loaded or mounted. The script makes it easy to change this:

UML# ./vendor/common/o2cb.init load

Loading module "configfs": OK

Mounting configfs filesystem at /config: OK

Loading module "ocfs2_nodemanager": OK

Loading module "ocfs2_dlm": OK

Loading module "ocfs2_dlmfs": OCFS2 User DLM kernel \

interface loaded

OK

Mounting ocfs2_dlmfs filesystem at /dlm: OK

We can check that the status has now changed:

UML# ./vendor/common/o2cb.init status

Module "configfs": Loaded

Filesystem "configfs": Mounted

Module "ocfs2_nodemanager": Loaded

Module "ocfs2_dlm": Loaded

Module "ocfs2_dlmfs": Loaded

Filesystem "ocfs2_dlmfs": Mounted

Everything looks good. Now we need to set up the cluster configuration file. There is a template in documentation/samples/clus-ter.con, which I copied to /etc/ocfs2/cluster.conf after creating /etc/ocfs2 and which I modified slightly to look like this:

UML# cat /etc/ocfs2/cluster.conf

node:

ip_port = 7777

ip_address = 192.168.0.253

number = 0

name = node0

cluster = ocfs2

node:

ip_port = 7777

ip_address = 192.168.0.251

number = 1

name = node1

cluster = ocfs2

cluster:

node_count = 2

name = ocfs2

The one change I made was to alter the IP addresses to what I intend to use for the two UML instances that will form the cluster. You should use IP addresses that work on your network.

The last thing to do before shutting down this instance is to create the mount point where the cluster filesystem will be mounted:

Shut this instance down, and we will boot the cluster, after taking care of one last item on the hostcreating the device that the cluster nodes will share:

host% dd if=/dev/zero of=ocfs seek=$[ 100 * 1024 ] bs==1K count=1

Booting the Cluster

Now we boot two UML instances on COW files with the filesystem we just used as their backing file. So, rather than using ubda=rootfs as we had before, we will use ubda=cow.node0, rootfs and ubda=cow.node1,rootfs for the two instances, respectively. I am also giving them umid s of node0 and node1 in order to make them easy to reference with uml_mconsole later.

The reason for mostly configuring ocfs2, shutting the UML instance down, and then starting up the cluster nodes is that the filesystem changes we made, such as installing the ocfs2 tools and the configuration file, will now be visible in both instances. This saves us from having to do all of the previous work twice.

With the two instances running, we need to give them their separate identities. The cluster.conf file specifies the node names as node0 and node1. We now need to change the machine names of the two instances to match these. In Fedora Core 4, which I am using, the names are stored in /etc/sysconfig/network. The node part of the value of HOSTNAME needs to be changed in one instance to node0 and in the other to node1. The domain name can be left alone.

We need to set the host name by hand since we changed the configuration file too late:

and

Next, we need to bring up the network for both instances:

host% uml_mconsole node0 config eth0=tuntap,,,192.168.0.254

OK

host% uml_mconsole node1 config eth0=tuntap,,,192.168.0.252

OK

When configuring eth0 within the instances, it is important to assign IP addresses as specified in the cluster.conf file previously. In my example above, node0 has IP address 192.168.0.253 andnode1 has address 192.168.0.251 :

UML1# ifconfig eth0 192.168.0.253 up

and

UML2# ifconfig eth0 192.168.0.251 up

At this point, we need to set up a filesystem on the shared device, so it's time to plug it in:

host% uml_mconsole node0 config ubdbc=ocfs

and

host% uml_mconsole node1 config ubdbc=ocfs

The c following the device name is a flag telling the block driver that this device will be used as a clustered device, so it shouldn't lock the file on the host. You should see this message in the kernel log after plugging the device:

Not locking "/home/jdike/linux/2.6/ocfs" on the host

Before making a filesystem, it is necessary to bring the cluster up in both nodes:

UML# ./vendor/common/o2cb.init online ocfs2

Loading module "configfs": OK

Mounting configfs filesystem at /config: OK

Loading module "ocfs2_nodemanager": OK

Loading module "ocfs2_dlm": OK

Loading module "ocfs2_dlmfs": OCFS2 User DLM kernel interface loaded

OK

Mounting ocfs2_dlmfs filesystem at /dlm: OK

Starting cluster ocfs2: OK

Now, on one of the nodes, we run mkfs :

mkfs.ocfs2 -b 4K -C 32K -N 8 -L ocfs2-test /dev/ubdb

mkfs.ocfs2 1.1.2-ALPHA

Overwriting existing ocfs2 partition.

(1552,0):__dlm_print_nodes:380 Nodes in my domain \

("CB7FB73E8145436EB93D33B215BFE919"):

(1552,0):__dlm_print_nodes:384 node 0

Filesystem label=ocfs2-test

Block size=4096 (bits=12)

Cluster size=32768 (bits=15)

Volume size=104857600 (3200 clusters) (25600 blocks)

1 cluster groups (tail covers 3200 clusters, rest cover 3200 clusters)

Journal size=4194304

Initial number of node slots: 8

Creating bitmaps: done

Initializing superblock: done

Writing system files: done

Writing superblock: done

Writing lost+found: done

mkfs.ocfs2 successful

This specifies a block size of 4096 bytes, a cluster size of 32768 bytes, a maximum cluster size of eight nodes, and a volume label of ocfs2-test.

At this point, we can mount the device in both nodes, and we have a working cluster:

UML1# mount /dev/ubdb /ocfs2 -t ocfs2

(1618,0):ocfs2_initialize_osb:1165 max_slots for this device: 8

(1618,0):ocfs2_fill_local_node_info:836 I am node 0

(1618,0):__dlm_print_nodes:380 Nodes in my domain \

("B01E29FE0F2F43059F1D0A189779E101"):

(1618,0):__dlm_print_nodes:384 node 0

(1618,0):ocfs2_find_slot:266 taking node slot 0

JBD: Ignoring recovery information on journal

ocfs2: Mounting device (98,16) on (node 0, slot 0)

UML2# mount /dev/ubdb /ocfs2 -t ocfs2

(1442,0):o2net_set_nn_state:417 connected to node node0 \

(num 0) at 192.168.0.253:7777

(1522,0):ocfs2_initialize_osb:1165 max_slots for this device: 8

(1522,0):ocfs2_fill_local_node_info:836 I am node 1

(1522,0):__dlm_print_nodes:380 Nodes in my domain \

("B01E29FE0F2F43059F1D0A189779E101"):

(1522,0):__dlm_print_nodes:384 node 0

(1522,0):__dlm_print_nodes:384 node 1

(1522,0):ocfs2_find_slot:266 taking node slot 1

JBD: Ignoring recovery information on journal

ocfs2: Mounting device (98,16) on (node 1, slot 1)

Now we start to see communication between the two nodes. This is visible in the output from the second mount and in the kernel log of node0 when node1 comes online.

To quickly demonstrate that we really do have a cluster, I will copy a file into the filesystem on node0 and see that it's visible on node1 :

UML1# cd /ocfs2

UML1# cp ~/ocfs2-tools-1.1.2.tar .

UML1# ls -al

total 2022

drwxr-xr-x 3 root root 4096 Oct 14 16:24 .

drwxr-xr-x 28 root root 4096 Oct 14 16:17 ..

drwxr-xr-x 2 root root 4096 Oct 14 16:15 lost+found

-rw-r--r-- 1 root root 2058240 Oct 14 16:24 \

ocfs2-tools-1.1.2.tar

On the second node, I'll unpack the tar file to see that it's really there.

UML2# cd /ocfs2

UML2# ls -al

total 2022

drwxr-xr-x 3 root root 4096 Oct 14 16:15 .

drwxr-xr-x 28 root root 4096 Oct 14 16:18 ..

drwxr-xr-x 2 root root 4096 Oct 14 16:15 lost+found

-rw-r--r-- 1 root root 2058240 Oct 14 16:24 \

ocfs2-tools-1.1.2.tar

UML2# tar xf ocfs2-tools-1.1.2.tar

UML2# ls ocfs2-tools-1.1.2

COPYING aclocal.m4 fsck.ocfs2 mount.ocfs2 \

rpmarch.guess

CREDITS config.guess glib-2.0.m4 mounted.ocfs2 \

runlog.m4

Config.make.in config.sub install-sh o2cb_ctl \

sizetest

MAINTAINERS configure libo2cb ocfs2_hb_ctl \

tunefs.ocfs2

Makefile configure.in libo2dlm ocfs2cdsl \

vendor

Postamble.make debian libocfs2 ocfs2console

Preamble.make debugfs.ocfs2 listuuid patches

README documentation mkfs.ocfs2 python.m4

README.O2CB extras mkinstalldirs pythondev.m4

This is the simplest possible use of a clustered filesystem. At this point, if you were evaluating a cluster as an environment for running an application, you would copy its data into the filesystem, run it on the cluster nodes, and see how it does.

Exercises

For some casual usage here, we could put our users' home directories in the ocfs2 filesystem and experiment with having the same file accessible from multiple nodes. This would be a somewhat advanced version of NFS home directories.

A more advanced project would be to boot the nodes into an ocfs2 root filesystem, making them as clustered as they can be, given only one filesystem. We would need to solve a couple of problems.

The cluster needs to be running before the root filesystem can be mounted. This would require an initramfs image containing the necessary modules, initialization script, and tools. A script within this image would need to bring up the network and run the ocfs2control script to bring up the cluster. The cluster nodes need some private data to give them their separate identities. Part of this is the network configuration and node names. Since the network needs to be operating before the root filesystem can be mounted, some of this information would be in the initramfs image. The rest of the node-private information would have to be provided in files on a private block device. These files would be bind-mounted from this device over a shared file within the cluster file system, like this:

UML# mount --bind /private/network /etc/sysconfig/network

Without having done this myself, I am no doubt missing some other issues. However, none of this seems insurmountable, and it would make a good project for someone wanting to become familiar with setting up and running a cluster.

Other Clusters

I've demonstrated UML's virtual clustering capabilities using Oracle'socfs2. This isn't the only clustering technology availableI chose it because it nicely demonstrates the use of a host file to replace an expensive piece of hardware, a shared disk. Other Linux cluster filesystems include Lustre from CFS, GFS from Red Hat, and, with a generous definition of clustering, NFS.

Further, filesystems aren't the only form of clustering technology that exists. Clustering technologies have a wide range, from simple failover, high-availability clusters to integrated single-system image clusters, where the entire cluster looks and acts like a single machine.

Most of these run with UML, either because they are architecture-independent and will run on any architecture that Linux supports, or because they are developed using UML and are thus guaranteed to run with UML. Many satisfy both conditions.

If you are looking into using clusters because you have a specific need or are just curious about them, UML is a good way to experiment. It provides a way to bring multiple nodes up without needing multiple physical machines. It also lets you avoid buying exotic hardware that the clustering technology may require, such as the shared storage required by ocfs2. UML makes it much more convenient and less expensive to bring in multiple clustering technologies and experiment with them in order to determine which one best meets your needs.

UML as a Decision-Making Tool for Hardware

In this chapter, I demonstrated the use of UML in simulating hardware that is difficult or expensive to acquire in order to make decisions about both software and hardware. By simulating a system with a great deal of devices of a particular sort, it is possible to probe the limits of the software you might run on such a machine. These limits could involve either the kernel or applications. By running the software stack on an appropriately configured UML instance, you can see whether it is going to have problems before you buy the hardware.

I demonstrated this with a UML instance with a very large number of Ethernet interfaces and some with varying amounts of memory, up to 64GB. The same could have been done with a number of other types of devices, such as CPUs and disks.

With memory, the objective was to analyze the memory requirements of a particular workload without actually having a physical system with the requisite memory in it. You must be careful about doing performance measurements in this case. Looking at wall-clock time is useless because real time will be controlled by the availability of real resources, such as physical memory in the host. A proxy for real time is needed, and when memory is concerned, disk I/O inside the virtual machine is usually a good choice.

The UML instance will act as though it has the memory that was configured on the command line, and the host will swap as necessary in order to maintain that illusion. Therefore, the virtual machine will explicitly swap only when that illusory physical memory is exhausted. A physical machine with that amount of memory will behave in the same way, so a lower amount of disk I/O in the virtual machine will translate into lower real time for the workload on a physical machine.

Finally, I demonstrated the configuration of a cluster of two UML instances. This substituted the use of a host file, rather than a shared disk device, as the cluster interconnect. The ability to substitute a free virtual resource for an expensive physical one is a good reason to prototype a cluster virtually before committing to a physical one. You can see whether your workload will run on a cluster, and if so, how well, with the earlier caveats about making performance measurements.

In a number of ways, a virtual machine is a useful tool for helping you make decisions about software intended to run on physical hardware and about the hardware itself. UML lets you simulate hardware that is expensive or inconvenient to acquire, so you can test-run the applications or workloads you intend to run on that hardware. By doing so, you can make more informed decisions about both the hardware and the software.

Chapter 13. The Future of UML

Currently, a UML instance is a standard virtual machine, hard to distinguish from a Linux instance provided by any of the other virtualization technologies available. UML will continue to be a standard virtual machine, with a number of performance improvements. Some of these have been discussed in earlier chapters, so I'm not going to cover them here. Rather, I will talk about how UML is also going to change out of recognition. Being a real port of the Linux kernel that runs as a completely normal process gives UML capabilities not possessed by any other virtualization technology that provides virtual machines that aren't standard Linux processes.

We discussed part of this topic in Chapter 6, when we talked about humfs and its ability to store file metadata in a database. The capabilities presented there are more general than we have talked about. humfs is based on a UML filesystem called externfs, which imports host data into a UML instance as a filesystem. By writing plugin modules for externfs, such as humfs, anything on the host that even remotely resembles a filesystem can be imported into a UML instance as a mountable filesystem.

Similarly, external resources that don't resemble filesystems but do resemble processes could be imported, in a sense, into a UML instance as a process. The UML process would be a representative of the outside resource, and its activity and statistics would be represented as the activity and statistics of the UML process. Operations performed on the UML process, such as sending it signals or changing its priority, would be reflected back out to the outside in whatever way makes sense.

An extension of this idea is to import the internal state of an application into UML as a filesystem. This involves embedding a UML instance into the application and modifying the application sufficiently to provide access to its data from the captive UML instance through a filesystem. Doing so requires linking UML into the application so that the UML instance and the application share an address space, making it easy for them to share data.

It may not be obvious why this is useful, but it has potential that may turn out to be revolutionary for two major reasons.